We present a technique for synthesizing the effects of skin microstructure deformation by anisotropically convolving a high-resolution displacement map to match normal distribution changes in measured skin samples. We use a 10-micron resolution scanning technique to measure several in vivo skin samples as they are stretched and compressed in different directions, quantifying how stretching smooths the skin and compression makes it rougher. We tabulate the resulting surface normal distributions, and show that convolving a neutral skin microstructure displacement map with blurring and sharpening filters can mimic normal distribution changes and microstructure deformations. We implement the spatially-varying displacement map filtering on the GPU to interactively render the effects of dynamic microgeometry on animated faces obtained from high-resolution facial scans.

Simulating the appearance of human skin is important for rendering realistic digital human characters for simulation, education, and entertainment applications. Skin exhibits great variation in color, surface roughness, and translucency over different parts of the body, between different individuals, and as it is transformed by articulation and deformation. But as variable as skin can be, human perception is remarkably attuned to the subtleties of skin appearance, as attested to by the vast array of makeup products designed to enhance and embellish it.

Advances in measuring and simulating the scattering of light beneath the surface of the skin have made it possible to render convincingly realistic human characters whose skin appears fleshy and organic. Today's high-resolution facial scanning techniques record facial geometry, surface coloration, and surface mesostructure details at the level of skin pores and fine creases, to a resolution of up to a tenth of a millimeter. By recording a sequence of such scans, or by performing blendshape animation using scans of different high-resolution expressions, the effects of dynamic mesostructure, such as pore stretching and skin furrowing, can be recorded and reproduced on a digital character.

Recent work recorded skin microstructure at a level of detail below a tenth of a millimeter for sets of skin patches on a face, and showed that texture synthesis could be used to increase the resolution of a mesostructure-resolution facial scan to one with microstructure everywhere. That work demonstrated that skin microstructure makes a significant difference in the appearance of skin, as it gives rise to a face's characteristic pattern of spatially-varying surface roughness. However, it recorded skin microstructure only for static patches from neutral facial expressions, and did not capture the dynamics of skin microstructure as skin stretches and compresses.

Yet skin microstructure is remarkably dynamic as a face makes different expressions. Fig. 2 shows a person's forehead as they make surprised, neutral, and angry expressions. In the neutral expression (center), the rough surface microstructure is relatively isotropic. When the brow is raised (left), not only do mesostructure furrows appear, but the microstructure also develops a pattern of horizontal ridges less than 0.1 mm across. In the perplexed expression (right), the knitted brow forms vertical anisotropic structures in its microstructure. Seen face to face or filmed in close-up, such dynamic microstructure is a noticeable aspect of human expression, and the anisotropic changes in surface roughness affect the appearance of specular highlights even from a distance.

Dynamic skin microstructure results from the epidermal skin layers being stretched and compressed by motion of the tissues underneath. Since the skin surface is relatively stiff, it develops a rough microstructure to effectively store a reserve of surface area to prevent rupturing when extended. Thus, parts of the skin which stretch and compress significantly (such as the forehead and around the eyes) are typically rougher than parts which are mostly static, such as the tip of the nose or the top of the head. When skin stretches, the microstructure flattens out and the surface appears less rough as the reserves of tissue are called into action. Under compression, the microstructure bunches up, creating micro-furrows which exhibit anisotropic roughness. Often, stretching in one dimension is accompanied by compression in the perpendicular direction to maintain the area of the surface or the volume of tissues below. A balloon provides a clear example of roughness changes under deformation: the surface is diffuse at first, and becomes shiny when inflated.

While it would be desirable to simulate these changes in appearance during facial animation, current techniques do not record or simulate dynamic surface microstructure for facial animation. One reason is scale: taking the facial surface to be 25 cm × 25 cm, recording facial shape at 10 micron resolution would require real-time gigapixel imaging beyond the capabilities of today's camera arrays. And simulating a billion triangles of skin surface, let alone several billion tetrahedra of volume underneath, would be computationally very expensive using finite element techniques.
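The scale argument can be made concrete with a short calculation. The sketch below treats the face as a flat 25 cm × 25 cm square sampled on a regular grid, which is a simplifying assumption made only for this estimate, and the helper names are hypothetical. At 10 micron spacing this gives 25,000 samples per side, or 625 million samples (roughly 0.6 gigapixels) per frame, and about 1.25 billion triangles if each grid cell is split into two.

```python
# Back-of-the-envelope sample counts for capturing or simulating a whole face at
# microstructure resolution. Treating the face as a flat square on a regular
# grid is a simplifying assumption made only for this estimate.

FACE_SIDE_MM = 250.0      # 25 cm, as in the text
SAMPLE_PITCH_MM = 0.010   # 10 microns


def samples_per_side(side_mm: float, pitch_mm: float) -> int:
    """Grid samples needed along one side (rounded to absorb float error)."""
    return round(side_mm / pitch_mm)


def total_samples(side_mm: float, pitch_mm: float) -> int:
    """Samples (pixels) needed to cover the whole square once."""
    n = samples_per_side(side_mm, pitch_mm)
    return n * n


def grid_triangles(side_mm: float, pitch_mm: float) -> int:
    """Triangles in a regular grid mesh: two per cell, (n - 1)^2 cells."""
    n = samples_per_side(side_mm, pitch_mm)
    return 2 * (n - 1) ** 2


if __name__ == "__main__":
    print("samples per side:", samples_per_side(FACE_SIDE_MM, SAMPLE_PITCH_MM))
    print("samples per frame:", total_samples(FACE_SIDE_MM, SAMPLE_PITCH_MM))
    print("grid triangles:", grid_triangles(FACE_SIDE_MM, SAMPLE_PITCH_MM))
```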

In this work, we approximate the first-order effects of dynamic skin microstructure by performing fast image processing on a high-resolution skin microstructure displacement map obtained as in prior work. As the skin surface deforms, we blur the displacement map along the direction of stretching and sharpen it along the direction of compression. On a modern GPU, this can be performed at interactive rates, even for facial skin microstructure at ten micron resolution. We determine the degree of blurring and sharpening by measuring the in vivo surface microstructure of several skin patches under a range of stretching and compression, and tabulating the changes in their surface normal distributions. We then choose the amount of blurring or sharpening that effects a similar change in the surface normal distribution of the microstructure displacement map. While our technique falls short of simulating all the observable effects of dynamic microstructure, it produces measurement-based changes in surface roughness and anisotropic changes in surface microstructure orientation consistent with real skin deformation. For validation, we compare renderings using our technique to real photographs of faces making similar expressions.
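The core operation can be sketched as separable 1D filtering of the displacement map. The code below is a minimal CPU illustration, not the GPU implementation described here, and it rests on several assumptions: the stretch and compression axes are aligned with the texture's x or y axis, stretching uses a Gaussian blur, compression uses a 1D unsharp mask, roughness is measured as the variance of finite-difference slopes, and the blur width is chosen by a simple grid search against a target variance. All function names and the clamp-to-edge border handling are illustrative choices.

```python
import math
import random
from typing import List, Sequence

Grid = List[List[float]]


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1D Gaussian taps covering about +/- 3 sigma."""
    radius = max(1, math.ceil(3.0 * sigma))
    taps = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]


def convolve_along(grid: Grid, kernel: List[float], axis: int) -> Grid:
    """Filter along x (axis=1) or y (axis=0), clamping indices at the borders."""
    h, w = len(grid), len(grid[0])
    r = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for k, weight in enumerate(kernel):
                off = k - r
                if axis == 1:
                    acc += weight * grid[y][min(max(x + off, 0), w - 1)]
                else:
                    acc += weight * grid[min(max(y + off, 0), h - 1)][x]
            out[y][x] = acc
    return out


def blur_along(grid: Grid, sigma: float, axis: int) -> Grid:
    """Stretching: smooth the displacement map along the stretch axis."""
    return convolve_along(grid, gaussian_kernel(sigma), axis)


def sharpen_along(grid: Grid, sigma: float, amount: float, axis: int) -> Grid:
    """Compression: 1D unsharp mask that boosts detail along the compression axis."""
    blurred = blur_along(grid, sigma, axis)
    return [[g + amount * (g - b) for g, b in zip(g_row, b_row)]
            for g_row, b_row in zip(grid, blurred)]


def slope_variance(grid: Grid, axis: int) -> float:
    """Variance of forward differences along one axis, a simple roughness proxy."""
    if axis == 1:
        d = [row[x + 1] - row[x] for row in grid for x in range(len(row) - 1)]
    else:
        d = [grid[y + 1][x] - grid[y][x]
             for y in range(len(grid) - 1) for x in range(len(grid[0]))]
    mean = sum(d) / len(d)
    return sum((v - mean) ** 2 for v in d) / len(d)


def fit_blur_sigma(grid: Grid, target_var: float, axis: int,
                   candidates: Sequence[float]) -> float:
    """Choose the candidate blur width whose result best matches target_var."""
    return min(candidates,
               key=lambda s: abs(slope_variance(blur_along(grid, s, axis), axis) - target_var))


if __name__ == "__main__":
    rng = random.Random(0)
    disp = [[rng.gauss(0.0, 1.0) for _ in range(32)] for _ in range(32)]
    # Stretch along x, then compress along y.
    deformed = sharpen_along(blur_along(disp, 1.5, axis=1), 1.5, 1.0, axis=0)
    print("x roughness:", slope_variance(disp, 1), "->", slope_variance(deformed, 1))
    print("y roughness:", slope_variance(disp, 0), "->", slope_variance(deformed, 0))
```

A grid search is used rather than bisection because it does not assume that the measured roughness decreases strictly monotonically with the discretized blur width.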

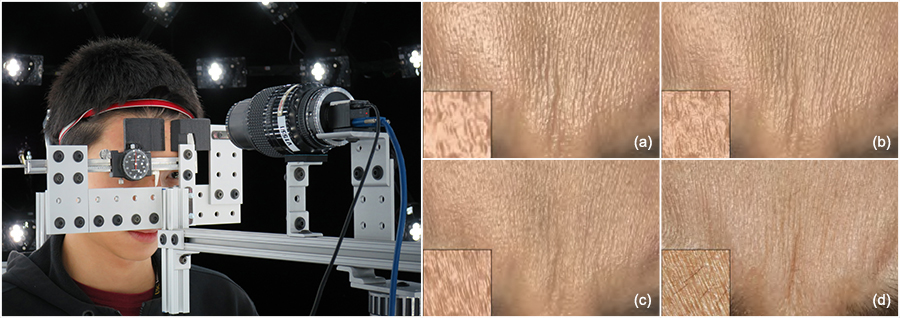

We record the surface microstructure of various skin patches at 10 micron resolution with a setup similar to that of prior work, which uses a set of differently lit photos taken under polarized gradient illumination. The sample patches are scanned in different deformed states using the lighting apparatus together with a custom stretching and measuring device consisting of a caliper and a 3D-printed stretching aperture. The aperture of the patch holder is set to 8 mm for the neutral deformation state and is placed 30 cm away from a Ximea machine vision camera, which records monochrome 2048 × 2048 pixel images through a Nikon 105 mm macro lens at f/16, so that each pixel covers a 6 micron square of skin. The 16 polarized spherical lighting conditions allow the isolation and measurement of specular surface normals, resulting in a per-pixel surface normal map. We integrate the surface normal map to compute a displacement map, and apply a high-pass filter that removes surface variation at scales larger than roughly a millimeter, eliminating the overall bulging of the sample.
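One simple way to carry out the integration and high-pass steps is sketched below. It is an illustration under stated assumptions rather than the pipeline used for these measurements: it uses naive path integration of the slopes implied by the normals (least-squares or Fourier-domain integrators such as Frankot-Chellappa are more robust to noise), and it subtracts a separable box-filtered copy with a window of about 1 mm as the low-pass estimate. The helper names and the exact cutoff are hypothetical.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
NormalMap = List[List[Tuple[float, float, float]]]


def normals_to_slopes(normals: NormalMap) -> Tuple[Grid, Grid]:
    """Height slopes p = dz/dx and q = dz/dy implied by unit normals (nx, ny, nz)."""
    p = [[-nx / nz for (nx, _, nz) in row] for row in normals]
    q = [[-ny / nz for (_, ny, nz) in row] for row in normals]
    return p, q


def integrate_slopes(p: Grid, q: Grid, pixel_size: float) -> Grid:
    """Naive path integration (trapezoid rule): down the first column using q,
    then across each row using p. Noise accumulates along the paths."""
    h, w = len(p), len(p[0])
    z = [[0.0] * w for _ in range(h)]
    for y in range(1, h):
        z[y][0] = z[y - 1][0] + 0.5 * (q[y - 1][0] + q[y][0]) * pixel_size
    for y in range(h):
        for x in range(1, w):
            z[y][x] = z[y][x - 1] + 0.5 * (p[y][x - 1] + p[y][x]) * pixel_size
    return z


def box_blur_1d(values: List[float], radius: int) -> List[float]:
    """Moving average over up to 2*radius+1 samples (window shrinks at the edges)."""
    prefix = [0.0]
    for v in values:
        prefix.append(prefix[-1] + v)
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        out.append((prefix[hi] - prefix[lo]) / (hi - lo))
    return out


def high_pass(z: Grid, radius: int) -> Grid:
    """Subtract a separable box-blurred copy, keeping detail finer than the window."""
    rows = [box_blur_1d(row, radius) for row in z]
    cols = [box_blur_1d(list(col), radius) for col in zip(*rows)]
    low = [list(row) for row in zip(*cols)]
    return [[a - b for a, b in zip(z_row, l_row)] for z_row, l_row in zip(z, low)]


if __name__ == "__main__":
    pixel_mm = 0.006                  # 6 micron pixels, as in the text
    radius = round(0.5 / pixel_mm)    # ~1 mm wide window (assumed cutoff)
    print("high-pass window radius in pixels:", radius)
```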

Each skin patch, such as part of the forehead, cheek, or chin, is coupled to the caliper aperture using 3M double-sided adhesive tape, and each scan lasts about half a second. After performing the neutral scan, the calipers are narrowed by 0.8 mm and the first compressed scan is taken; this continues until the skin inside the aperture buckles significantly. Then, the calipers are returned to neutral, and scans are taken with progressively increased stretching until the skin detaches from the double-sided tape. Fig. 3 shows a skin sample in five different states of strain. The calipers can be rotated to different angles, allowing the same patch of skin to be recorded in up to four different orientations, such as the forehead sample seen in Fig. 4.
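With an 8 mm neutral aperture, each 0.8 mm caliper step corresponds to a nominal strain of 10%. The sketch below tabulates aperture widths and engineering strains under hypothetical assumptions: stretching is taken to proceed in the same 0.8 mm steps (the text does not state the stretch increment), strain is assumed uniform across the aperture, and the step counts are placeholders, since in practice compression stops at buckling and stretching at tape detachment.

```python
from typing import List, Tuple


def caliper_schedule(neutral_mm: float, step_mm: float,
                     n_compress: int, n_stretch: int) -> Tuple[List[float], List[float]]:
    """Aperture widths for successive compressed and stretched scans."""
    compressed = [neutral_mm - step_mm * i for i in range(1, n_compress + 1)]
    stretched = [neutral_mm + step_mm * i for i in range(1, n_stretch + 1)]
    return compressed, stretched


def nominal_strain(width_mm: float, neutral_mm: float) -> float:
    """Engineering strain (L - L0) / L0: negative for compression."""
    return (width_mm - neutral_mm) / neutral_mm


if __name__ == "__main__":
    neutral = 8.0  # mm, neutral aperture from the text
    compressed, stretched = caliper_schedule(neutral, 0.8, n_compress=3, n_stretch=3)
    for w in compressed + stretched:
        print(f"{w:.1f} mm -> strain {nominal_strain(w, neutral):+.2f}")
```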

With skin patch data acquired, we now wish to characterize how surface microfacet distributions change under compression and stretching. After applying a denoising filter to the displacement maps to reduce camera noise, we create a histogram of the surface orientations observed across the skin patch for each state in its range of strain. Several such histograms are visualized in Fig. 4 next to their corresponding skin samples; each can also be thought of as the specular lobe that would reflect off the patch. As can be seen, stretched skin becomes anisotropically shinier in the direction of the stretch, and anisotropically rougher in the direction of compression. For some samples, such as the chin in Fig. 4(g,h), we observed that the amount of change in the normal distribution depends somewhat on the stretching direction. However, our model does not yet account for the effect of the stretching direction.
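A histogram like those in Fig. 4 can be built directly from a displacement map. The sketch below is one plausible construction, not necessarily the one used for the figures: it assumes denoising has already been applied, takes central-difference slopes, converts them to unit normals, and bins the normals' x and y components on a square grid. The function name and binning parameters are illustrative.

```python
import math
from typing import List

Grid = List[List[float]]


def normal_histogram(disp: Grid, pixel_size: float, bins: int,
                     extent: float) -> List[List[int]]:
    """Count interior pixels by the (nx, ny) components of their surface normal.
    hist[j][i] covers nx in bin i and ny in bin j over [-extent, extent)."""
    h, w = len(disp), len(disp[0])
    hist = [[0] * bins for _ in range(bins)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central-difference slopes of the height field.
            p = (disp[y][x + 1] - disp[y][x - 1]) / (2.0 * pixel_size)
            q = (disp[y + 1][x] - disp[y - 1][x]) / (2.0 * pixel_size)
            norm = math.sqrt(p * p + q * q + 1.0)
            nx, ny = -p / norm, -q / norm
            # floor (not int) so negative offsets land in the correct bin
            i = math.floor((nx + extent) / (2.0 * extent) * bins)
            j = math.floor((ny + extent) / (2.0 * extent) * bins)
            if 0 <= i < bins and 0 <= j < bins:  # ignore normals outside the plot
                hist[j][i] += 1
    return hist
```

A flat patch puts every count in the central bin; rougher patches spread the counts outward, and anisotropic roughness spreads them further along one axis than the other.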

The variances in x and y of the surface normal distribution quantify the degree of surface smoothing or roughening as a function of the strain applied to the sample. Again, stretched skin becomes shinier, and compressed skin becomes rougher.
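The per-axis variances can be computed from the same normals. The hypothetical helper below uses the x and y components of unit normals obtained from central-difference slopes and returns their two variances. On a measured sample, a stretched axis would be expected to show a smaller variance and a compressed axis a larger one. The usage example uses a synthetic ridge pattern, not measured data.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]


def normal_variances(disp: Grid, pixel_size: float) -> Tuple[float, float]:
    """Variance of the x and y components of the unit surface normals over the
    interior of a displacement map (central-difference slopes)."""
    nxs, nys = [], []
    h, w = len(disp), len(disp[0])
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            p = (disp[y][x + 1] - disp[y][x - 1]) / (2.0 * pixel_size)
            q = (disp[y + 1][x] - disp[y - 1][x]) / (2.0 * pixel_size)
            norm = math.sqrt(p * p + q * q + 1.0)
            nxs.append(-p / norm)
            nys.append(-q / norm)

    def variance(values: List[float]) -> float:
        mean = sum(values) / len(values)
        return sum((v - mean) ** 2 for v in values) / len(values)

    return variance(nxs), variance(nys)


if __name__ == "__main__":
    # Synthetic ridges that vary only along x: rough in x, perfectly smooth in y.
    ridges = [[math.sin(0.7 * x) for x in range(16)] for _ in range(16)]
    var_x, var_y = normal_variances(ridges, 1.0)
    print(var_x, var_y)
```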

Fig. 5 shows frames from a sequence of a 1 cm wide digitized skin patch being deformed by an invisible probe. It uses a relatively low-resolution finite element volumetric mesh with 25,000 tetrahedra to simulate the mesostructure, which in turn drives the dynamic microstructure convolution. The neutral microstructure was recorded using the system in Fig. 1 (left) at 10 micron resolution from the forehead of a young adult male, and its microstructure is convolved with parameters fit to match its own surface normal distribution changes under deformation, as described in Sec. 5. The rendering was made using the V-Ray package to simulate subsurface scattering. As seen in the accompanying video, the skin microstructure bunches up and flattens out as the surface deforms, at a resolution much finer than that of the FEM simulation.
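Driving the convolution from a simulation requires a local stretch direction and magnitude. The text does not specify how surface strain is extracted from the FEM mesostructure, so the sketch below shows one standard option, offered as an assumption: take the 2×2 deformation gradient F of the surface in texture space, form the right Cauchy-Green tensor C = FᵀF, and use its eigenvalues (the squared principal stretches) and eigenvectors. Those eigenvectors lie in the undeformed texture space, where the neutral displacement map is defined. Stretches above 1 map to a blur and stretches below 1 map to a sharpen along the corresponding direction; the tolerance and the returned tuple format are hypothetical.

```python
import math
from typing import List, Tuple

Mat2 = Tuple[Tuple[float, float], Tuple[float, float]]


def principal_stretches(F: Mat2) -> Tuple[float, float, float]:
    """Return (lambda_max, lambda_min, theta) for a 2x2 deformation gradient F.
    theta is the texture-space angle (radians) of the lambda_max direction, from
    the eigenvectors of the right Cauchy-Green tensor C = F^T F."""
    (f00, f01), (f10, f11) = F
    a = f00 * f00 + f10 * f10   # C[0][0]
    b = f00 * f01 + f10 * f11   # C[0][1] == C[1][0]
    c = f01 * f01 + f11 * f11   # C[1][1]
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    lam_max = math.sqrt(mean + radius)
    lam_min = math.sqrt(max(mean - radius, 0.0))  # clamp tiny negative round-off
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    return lam_max, lam_min, theta


def filter_plan(F: Mat2, tol: float = 1e-3) -> List[Tuple[str, float, float]]:
    """Map each principal stretch to ('blur' | 'sharpen', angle, stretch)."""
    lam_max, lam_min, theta = principal_stretches(F)
    plan = []
    for lam, angle in ((lam_max, theta), (lam_min, theta + 0.5 * math.pi)):
        if lam > 1.0 + tol:
            plan.append(("blur", angle, lam))     # stretched: smooth along angle
        elif lam < 1.0 - tol:
            plan.append(("sharpen", angle, lam))  # compressed: roughen along angle
    return plan


if __name__ == "__main__":
    # 20% stretch along x with 10% compression along y.
    print(filter_plan(((1.2, 0.0), (0.0, 0.9))))
```

Using C rather than the deformed-space tensor FFᵀ keeps the filter directions in texture coordinates, and a pure rotation produces no filtering because C is then the identity.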

Fig. 1 highlights the effect of using no microgeometry, static microgeometry, and dynamic microgeometry simulated using displacement map convolution, in a real-time rendering. Rendering with only the 4K-resolution mesostructure from a standard facial scan produces too polished an appearance at this scale. Adding static microstructure computed at 16K resolution using a texture synthesis technique increases visual detail, but produces surface strain cues that conflict with the actual deformation in the compressed and stretched areas. Convolving the static microstructure according to the surface strain, using normal distribution curves from a related skin patch, produces anisotropic skin microstructure consistent with the expression deformation and a more convincing sense of skin under tension.

The authors would like to thank Randal Hill, Kimberly Lu, Ari Shapiro, Cary Peng, Bill Phelps, Emily O'Brien, Jay Busch, Xueming Yu, Etienne Danvoye, Javier von del Pahlen, the Digital Human League, Valerie Dauphin, and Kathleen Haase for their assistance and support. This research was sponsored by the U.S. Army Research Laboratory (ARL), the Funai Foundation for Information Technology, and in part by the National Science Foundation (CAREER-1055035, IIS-1422869), the Sloan Foundation, and a Royal Society Wolfson Research Merit Award. The content of the information does not necessarily reflect the position or the policy of the US Government, and no official endorsement should be inferred.