We present a practical framework for reproducing omnidirectional incident illumination conditions with complex spectra using an LED sphere with multispectral LEDs. For lighting acquisition, we augment standard RGB panoramic photography with one or more observations of a color chart. We solve for how to drive the LEDs in each light source to match the observed RGB color of the environment and to best approximate the spectral lighting properties of the scene illuminant. Even when solving for non-negative intensities, we show that accurate illumination matches can be achieved with as few as four or six LED spectra for the entire ColorChecker chart, across a wide gamut of incident illumination spectra. A significant benefit of our approach is that, apart from the LED sphere itself, it requires neither specialized equipment such as monochromators or spectroradiometers nor explicit knowledge of the LED power spectra, camera spectral response curves, or color chart reflectance spectra. We describe two useful and easy-to-construct devices for multispectral illumination capture: one for slow measurements with fine angular spectral detail, and one for fast measurements with coarse spectral detail. We validate the approach by realistically compositing real subjects into acquired lighting environments, showing accurate matches to how the subject would actually look within the environments, even for environments with mixed illumination sources, and we demonstrate real-time lighting capture and playback using the technique.

Lighting reproduction systems as in [Debevec et al. 2002; Hamon et al. 2014] surround the subject with RGB color LEDs and drive them to match the lighting of the scene into which the subject will be composited. The lighting is recorded as panoramic, high-dynamic-range images, or rendered omnidirectionally with a global illumination renderer. While the results can be believable, especially under the stewardship of color correction artists, it is not clear how accurate they are: only RGB colors are used to record and reproduce the illumination, yet the visible spectrum carries significantly more detail than is being simulated. In particular, [Wenger et al. 2003] noted that light reproduced with RGB LEDs can produce unexpected color casts even when each light source mimics the directly observable color of the original illumination. Ideally, a lighting reproduction system would faithfully reproduce the appearance of the subject under any combination of illuminants, including incandescent, fluorescent, LED, and daylight, as well as any filtered or reflected version of such lighting.

Recently, several efforts [Gu and Liu 2012; Ajdin et al. 2012; Kitahara et al. 2015] have produced controllable LED spheres with more than just red, green, and blue LEDs in each light source, for purposes such as multispectral material reflectance measurement. These systems add colors such as amber and cyan, as well as white LEDs, which use phosphors to broaden their emission across the visible spectrum. In this work, we present a practical technique for driving the intensities of such LED arrangements to accurately reproduce the effects of real-world illumination environments containing any number of spectrally distinct illuminants. Our approach is practical because it does not require explicit spectroradiometer measurements of the illumination; it requires only traditional high dynamic range (HDR) panoramic photography and one or more observations of a color chart reflecting different directions of the illumination in the environment. Furthermore, we drive the LED intensities directly from the color chart observations and HDR panoramas, with no need to explicitly estimate illuminant spectra, or even to know the reflectance spectra of the color chart samples or the spectral sensitivity functions of the cameras involved.
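Why no spectra need to be measured can be seen under a standard linear image-formation model. This is an illustrative assumption (linear camera response, noise ignored, the same camera photographing the chart in both settings), not necessarily the exact formulation used here. Let $R_i(\lambda)$ be the reflectance of chart square $i$, $S_c(\lambda)$ the camera sensitivity of channel $c \in \{R, G, B\}$, $E(\lambda)$ the spectrum of the environment illumination arriving from the direction of one light source, and $I_j(\lambda)$ the emission spectrum of LED color $j$ at unit drive level. The chart's appearance under the environment, and under the LEDs driven at levels $\alpha_j$, is then

$$
p_{i,c} = \int R_i(\lambda)\,S_c(\lambda)\,E(\lambda)\,d\lambda,
\qquad
\hat{p}_{i,c}(\boldsymbol{\alpha}) = \sum_{j=1}^{n} \alpha_j\, M_{ij,c},
\qquad
M_{ij,c} = \int R_i(\lambda)\,S_c(\lambda)\,I_j(\lambda)\,d\lambda ,
$$

and the drive levels can be chosen as

$$
\boldsymbol{\alpha}^{\star} = \arg\min_{\boldsymbol{\alpha} \ge 0} \sum_{i} \sum_{c} \bigl(\hat{p}_{i,c}(\boldsymbol{\alpha}) - p_{i,c}\bigr)^{2}.
$$

Every $p_{i,c}$ is a pixel value of the chart photographed in the environment, and every $M_{ij,c}$ is a pixel value of the chart photographed under LED color $j$ alone, so $R_i$, $S_c$, $E$, and $I_j$ never have to be known individually.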

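Our straightforward process is:

1. Photograph a color chart under each of the LED colors available in the sphere.
2. Record the lighting environment using HDR panoramic photography and one or more observations of a color chart.
3. Fuse the RGB panorama and the directional color chart observations to estimate how the chart would appear when lit by the environment from the direction of each light source in the sphere.
4. Solve for the nonnegative LED intensities of each light source so that the chart lit by those LEDs best matches that appearance.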

Step one is simple, and step four simply uses a nonnegative least squares solver. For step two, we present two assemblies for capturing multispectral lighting environments that trade spectral angular resolution for speed of capture: one acquires a unique spectral signature for each lighting direction, while the other permits video-rate capture. For step three, we present a straightforward approach to fusing RGB panoramic imagery and directional color chart observations. The result is a relatively simple and visually accurate process for driving multispectral LED sphere lights to reproduce the spectrally complex illumination effects of real-world lighting environments. We demonstrate our approach by recording several lighting environments with natural and synthetic illumination and reproducing this illumination within an LED sphere with six distinct LED spectra. We show that this enhanced lighting reproduction process produces accurate appearance matches for color charts and human subjects and can be extended to real-time multispectral lighting capture and playback.
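Step four can be sketched as follows, assuming linear (RAW or HDR) chart values stored as NumPy arrays. Each LED color's calibration photograph from step one becomes one column of a matrix, the chart appearance estimated for a light source's direction becomes the target vector, and the drive levels are the nonnegative least squares solution. The names `nnls_small` and `solve_led_intensities` are hypothetical. Because one light source has only a handful of LED colors, this sketch solves the problem exactly by enumerating which LEDs are active, in place of a general-purpose solver such as SciPy's `scipy.optimize.nnls`.

```python
import itertools

import numpy as np


def nnls_small(A, b, tol=1e-12):
    """Exact nonnegative least squares: min ||A x - b|| subject to x >= 0.

    Enumerates every support (subset of active columns), solves ordinary least
    squares on it, and keeps the best feasible result. This costs 2**n solves,
    which is acceptable for the few LED colors in one light source.
    """
    n = A.shape[1]
    best_x, best_r = np.zeros(n), np.linalg.norm(b)  # x = 0 is always feasible
    for mask in itertools.product((False, True), repeat=n):
        idx = np.flatnonzero(mask)
        if idx.size == 0:
            continue
        sol, *_ = np.linalg.lstsq(A[:, idx], b, rcond=None)
        if np.all(sol >= -tol):  # feasible up to round-off
            sol = np.clip(sol, 0.0, None)
            r = np.linalg.norm(A[:, idx] @ sol - b)
            if r < best_r:
                best_x = np.zeros(n)
                best_x[idx] = sol
                best_r = r
    return best_x, best_r


def solve_led_intensities(led_charts, target_chart, channels=None):
    """Drive levels for one light source (hypothetical helper).

    led_charts:   (n_leds, n_squares, 3) linear RGB of the chart lit by each
                  LED color at unit intensity (step one).
    target_chart: (n_squares, 3) linear RGB of the chart as lit by the
                  environment from this light source's direction (step three).
    channels:     optional indices of the LED colors allowed to turn on.
    Returns (intensities, residual); LED colors left out get intensity 0.
    """
    led_charts = np.asarray(led_charts, dtype=float)
    n_leds = led_charts.shape[0]
    idx = np.arange(n_leds) if channels is None else np.asarray(channels)
    # Column j is the flattened chart photographed under LED color idx[j].
    A = led_charts[idx].reshape(len(idx), -1).T
    b = np.asarray(target_chart, dtype=float).ravel()
    x_sub, residual = nnls_small(A, b)
    intensities = np.zeros(n_leds)
    intensities[idx] = x_sub
    return intensities, residual
```

Assuming the LED order R, G, B, C, A, W along the first axis, passing `channels=[0, 1, 2, 5]` restricts the solve to RGBW and `channels=[0, 1, 2]` to RGB. Since a subset of LED colors can never fit better than the full set, the residual can only stay the same or grow as colors are removed.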

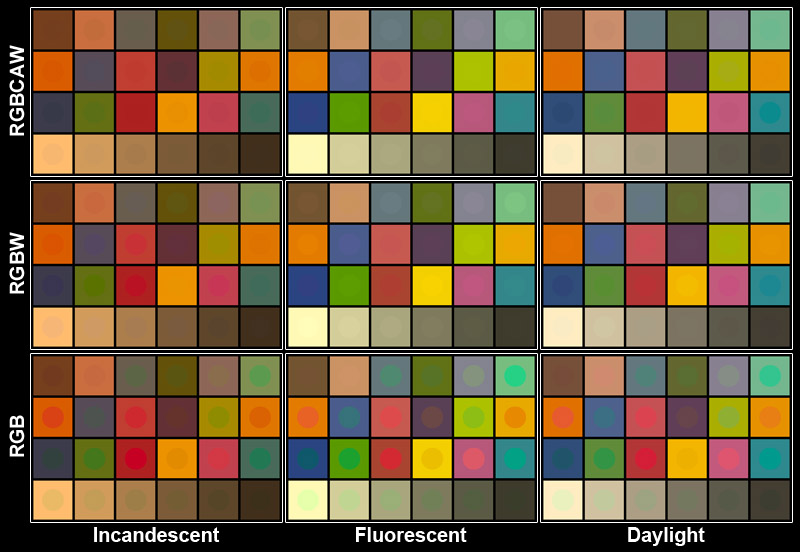

We used a Canon 1DX DSLR camera to photograph a Matte ColorChecker Nano chart from Edmund Optics, which includes 12 neutral grayscale squares and 18 color squares, under incandescent, fluorescent, and daylight illuminants. The background squares in the first row of Fig. 2 show the appearance of the color chart under the three illuminants, while the circles inside the squares show, for direct comparison, its appearance under the illumination reproduced with six LED colors (red, green, blue, cyan, amber, and white, or RGBCAW). The two are so similar that many of the circles are difficult to see at all. The second and third rows show the results of reproducing the illumination with just four (RGBW) or three (RGB) LED spectra. The RGBW matches are also quite good, but the RGB matches are generally poor, producing oversaturated colors that are easily distinguishable from the original appearance.
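The comparison layout of Fig. 2, with the reproduced color drawn as a circle inside the square of the original color, can be generated from two sets of chart RGB values. This is a minimal sketch assuming NumPy, one RGB value per square, and a 6-column grid; the function name `comparison_chart` and its parameters are hypothetical and describe an illustration of the layout, not the tool used to produce the figure.

```python
import numpy as np


def comparison_chart(original, reproduced, cols=6, patch=32, radius_frac=0.3):
    """Fig. 2-style comparison image (hypothetical helper).

    original, reproduced: (n_squares, 3) RGB values of each chart square under
    the real and the reproduced illumination. Each square is filled with its
    original color and a centered disk shows the reproduced color, so a good
    match makes the disk hard to see.
    """
    original = np.asarray(original, dtype=float)
    reproduced = np.asarray(reproduced, dtype=float)
    n = original.shape[0]
    rows = -(-n // cols)  # ceiling division
    img = np.zeros((rows * patch, cols * patch, 3))
    yy, xx = np.mgrid[0:patch, 0:patch]
    center = (patch - 1) / 2.0
    disk = (yy - center) ** 2 + (xx - center) ** 2 <= (radius_frac * patch) ** 2
    for i in range(n):
        r, c = divmod(i, cols)
        tile = img[r * patch:(r + 1) * patch, c * patch:(c + 1) * patch]  # view
        tile[:] = original[i]       # background square: original appearance
        tile[disk] = reproduced[i]  # inner circle: reproduced appearance
    return img
```

With linear chart values, the image would still need conversion to display values (for example, a gamma curve) before being viewed or saved.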

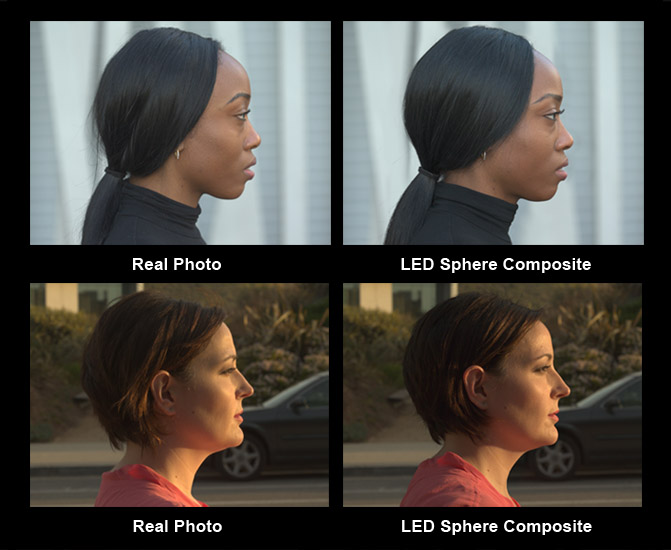

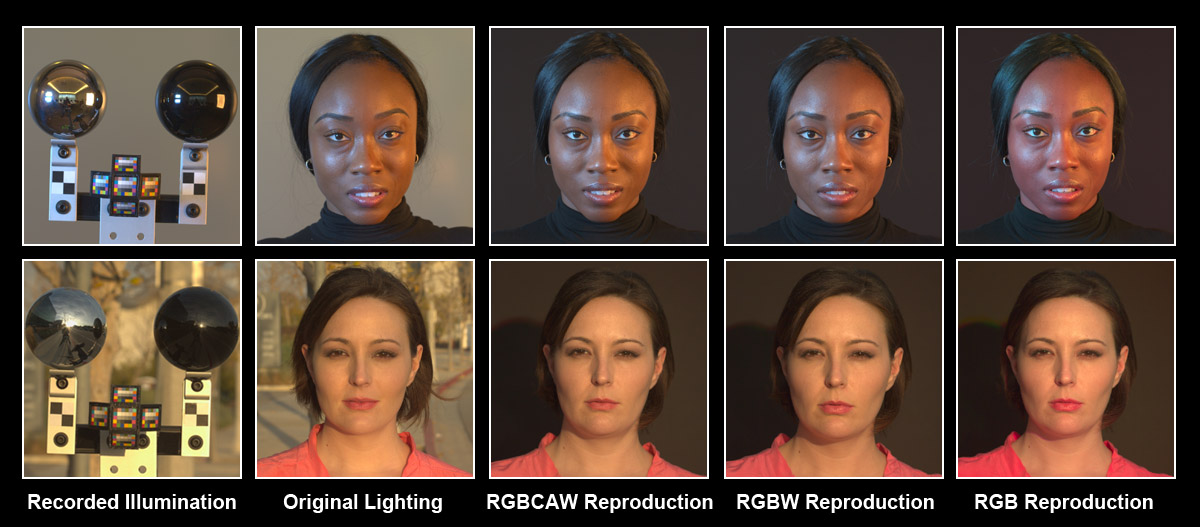

Fig. 3 shows two subjects in different lighting environments. The indoor environment (top row) featured an incandescent soft box light to the subject's left, spectrally distinct blue-gelled white LED light panels to her right, and fluorescent office lighting from the ceiling. The lighting was recorded using a five-color-chart and reflective sphere capture technique, and the subject was then photographed in the same environment as quickly as possible. Later, in the LED sphere, the lighting was reproduced using 6-channel RGBCAW, 4-channel RGBW, and 3-channel RGB lighting solves. The matches are generally visually very close for RGBCAW and RGBW lighting reproduction, whereas colors appear too saturated with RGB lighting. The fact that RGBW lighting reproduction performs nearly as well as RGBCAW suggests that these four spectra may be sufficient for many lighting reproduction applications. The bottom row features a sunset lighting condition, reproduced with RGBCAW lighting, in which the light of the setting sun was changing rapidly. We recorded the illumination both before and after photographing the subject, and averaged the two sets of color charts and RGB panoramas to solve for the lighting reproduction condition.

We applied our technique to an image sequence with dynamic lighting, in which an actor is rolled through a set lit by fluorescent, incandescent, and LED lights with various colored gels applied. We reconstructed the full dynamic range of the illumination using the chrome and black sphere reflections and generated a dynamic RGBCAW lighting environment using the same capture technique as in Fig. 3. The lighting was played back in the light stage while the actor repeated the performance in front of a green screen, and the actor was finally composited into a clean plate of the set. The lighting reproduction result is presented in Video 1 (Dynamic Multispectral Lighting Reproduction). The real and reproduced lighting scenarios are similar; the differences arise from discrepancies in the actor's pose, from spill light from the green screen (especially under the hat), and from rim light blocked by the green screen. We ensured that the automated matting technique did not alter the colors of the foreground element.

In this paper, we have presented a practical way to reproduce complex, multispectral lighting environments inside an LED sphere with multispectral light sources. The process is easy to practice, since it simply adds a small number of color chart observations to traditional HDR lighting capture techniques, and the only calibration required is to observe a color chart under each of the available LED colors in the sphere. The technique produces visually close matches to how the subject would actually look in the real lighting environments, even with as few as four LED spectra available (RGB and white), and can be applied to dynamic scenes. The technique may have useful applications in visual effects production, virtual reality, studio photography, cosmetics testing, and clothing design.