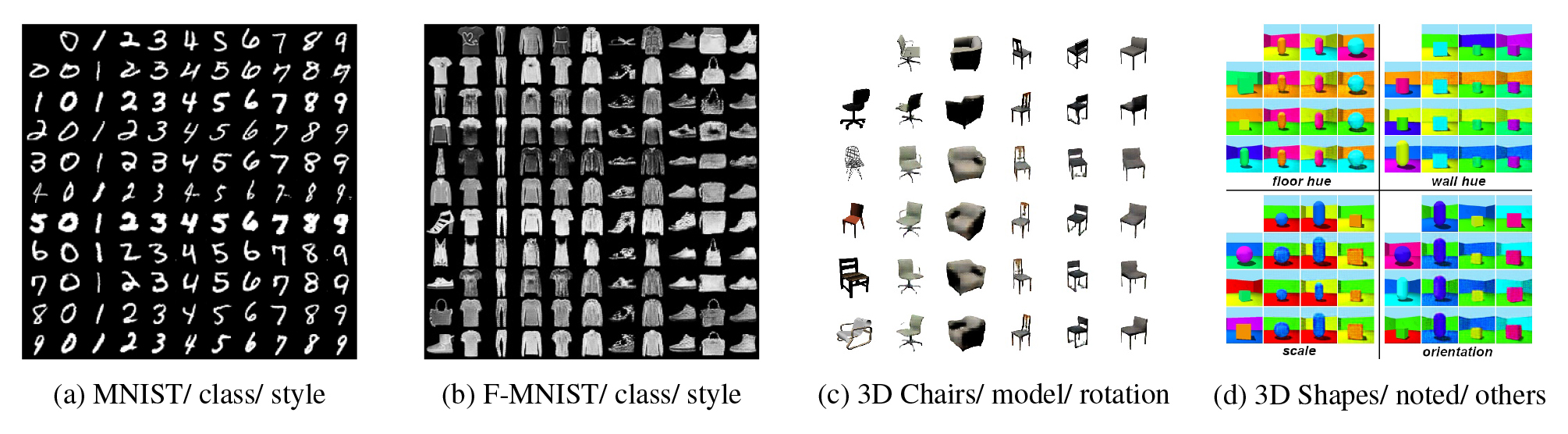

Disentangling data into interpretable and independent factors is critical for controllable generation tasks. With the availability of labeled data, supervision can help enforce the separation of specific factors as expected. However, it is often expensive or even impossible to label every single factor to achieve fully-supervised disentanglement. In this paper, we adopt a general setting where all factors that are hard to label or identify are encapsulated as a single unknown factor. Under this setting, we propose a flexible weakly-supervised multi-factor disentanglement framework DisUnknown, which Distills Unknown factors for enabling multi-conditional generation regarding both labeled and unknown factors. Specifically, a two-stage training approach is adopted to first disentangle the unknown factor with an effective and robust training method, and then train the final generator with the proper disentanglement of all labeled factors utilizing the unknown distillation. To demonstrate the generalization capacity and scalability of our method, we evaluate it on multiple benchmark datasets qualitatively and quantitatively and further apply it to various real-world applications on complicated datasets.

We empirically study how unknown distillation contributes

to the disentanglement of labeled factors and enables

control over the unknown factor.

Necessity of the Unknown Factor. Without the unknown

distillation, there is no guarantee that the features represented

by the unknown factor remain fixed when altering

any labeled ones. To compare, we modify Stage II by replacing

the unknown factor code encoded by E with Gaussian

noise and removing the feature matching loss ||μ−μ||2

(Eq. 5g), and train three models on 3D Shapes, with each selecting

floor hue, wall hue, and object hue as the unknown

factor, respectively. We generate images using the same

random code for the unknown factor and independentlysampled

random codes for all labeled factors, and then calculate

the ratio of results sharing the same unknown feature,

namely consistency ratio. Due to the simplicity of 3D

Shapes, these three features can be reliably computed by

taking the colors at fixed pixel coordinates. Two colors are

considered the same if their L2 RGB distance is less than

half of the mean distance between two adjacent hue samples

in the dataset. We generate 10,000 images for each network,

and show the results in Table 1. As can be seen, all ratios

reach 100% with distillation, meaning the unknown factor

remains unchanged for all test samples. Note that MIGs

are not measured here because the disentanglement performance

among labeled factors is generally not affected.

Scope of the Unknown Factor. In our setting, if there

is more than one unknown factor, all these factors will be

treated as a whole without individual controllability. However,

we can still ensure that the unknown factors are isolated

from the labeled ones, and the disentanglement performance

of the labeled factors will not be influenced. To

verify this, we train six models on 3D Shapes: starting all

factors labeled, we successively merge floor hue, orientation,

wall hue, scale, and shape into the unknown factor,

with object hue being the last labeled factor at the end. We

measure the consistency ratios as introduced in Necessity

of the Unknown Factor and MIG scores on object hue only

in Table 2. Note that all MIG scores are quite close to the

upper bound of 1, suggesting good disentanglement quality.

Choice of the Unknown Factor. We also study the robustness

of our method by choosing different factors as the

unknown one on 3D Shapes. The MSE and MIG results,

reflecting the consistent performance of reconstruction and

disentanglement, respectively, are shown in Table 3.

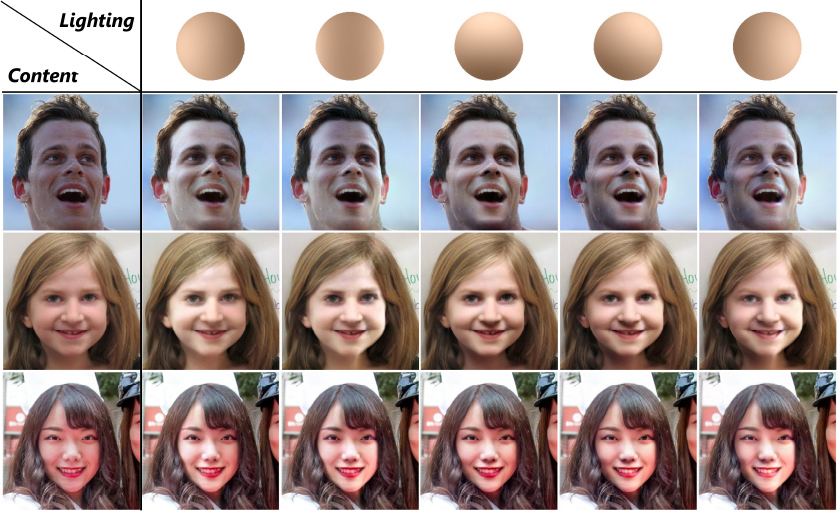

Portrait Relighting. We train the network on the dataset combining celebA-HQ [23] and FFHQ [24] by treating the lighting as the labeled factor and the remaining content as unknown. Here, lighting is represented by second-order spherical harmonics coefficients for RGB and estimated with [25, 6]. Figure 5 shows our portrait relighting results.

We propose DisUnknown, a weakly-supervised multifactor disentanglement learning framework. By distilling unknown factors, it enables independent control over each factor for multi-conditional generation. Our approach achieves state-of-the-art performance compared to existing unsupervised and weakly-supervised methods on multiple benchmark datasets. We further demonstrate its generalization capacity through various downstream tasks. Moreover, as a general framework, it can easily carry over to other modalities (e.g. text, audio) and help improve the stability of other tasks with our adversarial training strategies.