One of the "holy grails" of computer graphics is being able to create human faces that look and act realistically. There are many levels of attaining this goal. A digital photograph, for example, is a computer image of a human face that looks real. For faces that act realistically, you can't beat a video camera. These acquisition methods, however, are lacking for two reasons: they only record data in two dimensions, and they are not controllable. If you make a video recording of someone standing still reciting the alphabet, you can't then play it back and make her sing "The Star-Spangled Banner" while spinning in circles. Less obvious, but just as important for applications such as computer-based special effects in movies, is that there is no way to change the lighting after a photograph has been taken or a scene has been filmed. This means, for example, that it is difficult to take a photograph of a person standing in the desert on a sunny day and make it look like they are standing indoors in a cathedral in front of a stained glass window. Doing so requires painstaking manual touchup by skilled artists, and it is still almost impossible to make it look real.

One of the focuses of our work in the ICT Graphics Lab is acquiring 3D models of humans that can be animated, relit, edited, and otherwise modified. Our "killer app" would be a device in which a person sits and which, after a brief "scan," yields a computer graphics model of their face that we could stick onto a video game character's head or composite into a movie frame for a special effects shot. These computer-generated images would be so realistic that they would be indistinguishable from photographs. This level of realism is called photorealism.

Our prototype device for digitizing a human face is a rig called a lightstage. Scanning a person in the lightstage is a two-phase process. In the first phase, which lasts a few seconds, the lightstage photographs a human subject's face lit from hundreds of different directions. This recovers the skin's reflectance properties (how skin reflects light: its color, shininess, etc.). The second phase uses a technique called active vision to recover a 3D geometric model of the face (its shape). These two components, reflectance properties and geometry, should be enough to reconstruct the appearance of a person under any lighting and from any point of view.

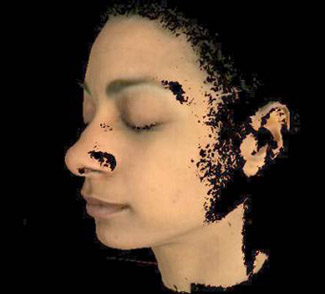

Active vision involves projecting patterns with a projector onto a subject. The face's appearance under each pattern is recorded by a separate video camera. By analyzing the images of the face with different patterns on it, active vision techniques obtain a 3D model of the subject. One of the problems with using active vision technology to recover high-resolution geometry is that the geometry tends to be very noisy. This noise is the geometric equivalent of getting bad reception on your TV. It introduces sharp random features like small ridges and valleys that are not present on the actual face. This is the case with the image shown below on the left (A). We would like techniques that allow us to recover higher quality geometric information, without all of this noise.

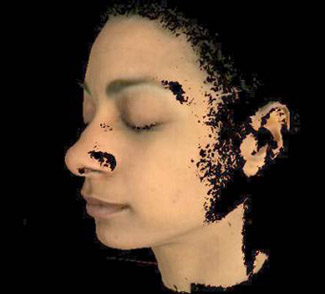
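To make the idea of analyzing projected patterns concrete, here is a minimal sketch of one common structured-light scheme, Gray-code stripes. This is an assumption for illustration: the text does not say which patterns the lightstage projects, and the function names below are hypothetical. Each projector column gets a unique on/off sequence across the patterns, so the bits a camera pixel observes identify which projector column lit it. Triangulating that correspondence into depth is not shown.

```python
# Illustrative sketch only: Gray-code stripes are an assumed pattern type,
# not necessarily what the lightstage projects.

def gray_encode(n: int) -> int:
    """Convert a binary index to its Gray code."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Convert a Gray code back to the binary index."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def stripe_patterns(width: int, bits: int) -> list[list[int]]:
    """patterns[k][x] is 1 if projector column x is lit in pattern k.

    Pattern 0 carries the most significant bit of the column's Gray code.
    """
    return [
        [(gray_encode(x) >> (bits - 1 - k)) & 1 for x in range(width)]
        for k in range(bits)
    ]


def decode_pixel(observed_bits: list[int]) -> int:
    """Recover the projector column from the lit/unlit bits (most
    significant first) that one camera pixel saw across all patterns."""
    g = 0
    for bit in observed_bits:
        g = (g << 1) | bit
    return gray_decode(g)
```

In practice, thresholding camera pixels into lit and unlit is itself error-prone, which is one plausible source of the noise described above.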

One approach is to use the reflectance properties we recover to improve the geometric model. Using the reflectance data recorded by the lightstage and a technique called photometric stereo, we are able to obtain an accurate estimate of the direction in which the skin surface faces at each point on the face. This data is called a surface normal map or orientation map. We have developed a technique, called differential displacement map recovery, that combines this normal map with the noisy range scan to obtain very accurate geometric models of the face. It is efficient (typical processing time for a high-res scan is 1-2 minutes) and it gives good results. Examples are shown below in figures A-D. A forthcoming publication will fully describe this technique.
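As a rough illustration of photometric stereo, the sketch below recovers the normal and albedo at a single pixel from three images lit from known directions. It assumes a purely diffuse (Lambertian) surface with no shadows or highlights, which real skin violates. It is the textbook formulation, not the lab's implementation, and all function names are hypothetical. With hundreds of lights, as in the lightstage, the same equations would be solved in a least-squares sense rather than exactly.

```python
import math

# Textbook Lambertian photometric stereo for one pixel (illustrative only).
# Model: intensity_i = albedo * dot(light_i, normal)


def det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def solve3(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the 3x3 system a @ x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions must not be coplanar")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(det3(m) / d)
    return solution


def normal_from_intensities(
    lights: list[list[float]], intensities: list[float]
) -> tuple[list[float], float]:
    """Return (unit normal, albedo) for one pixel.

    lights: three unit vectors pointing toward each light source.
    intensities: the pixel's brightness under each of those lights.
    """
    g = solve3(lights, intensities)  # g = albedo * normal
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo == 0.0:
        raise ValueError("pixel is black under every light")
    return [c / albedo for c in g], albedo
```

Running this at every pixel yields a normal map of the kind described above. How that map is merged with the range scan is specific to the lab's technique and is not sketched here.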

In addition to geometric information, it is crucial to be able to predict the color of the skin. Skin has interesting and subtle color variations depending on the direction from which it is viewed, the expression of the face, the oiliness of the skin surface, and how it is lit. For this reason, a single photograph is often not enough to produce the types of flexible digital facial models we are after. To fully understand and capture these color variations, many researchers have developed complicated skin reflectance models and techniques for recording skin color using different measurement devices. The techniques we use are explained in the paper "Acquiring the Reflectance Field of the Human Face," SIGGRAPH 2000.

Using this reflectance data, we are developing algorithms and tools to efficiently re-render the face from any viewpoint in any environment. Eventually, we would like to be able to reanimate the face as well. Examples of preliminary results are shown below in Figures C-D. These images can be generated at over 30 frames per second on a high-end PC with graphics hardware acceleration. Another prototype application, the Face Demo, also allows for real-time viewing of a 2D image of the face in any environment.
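Relighting a fixed-viewpoint 2D image can be sketched very simply. Because light adds linearly, a face photographed under each lightstage direction separately can be shown in a new environment by summing those photographs, each weighted by how bright the environment is from that direction. The sketch below assumes single-channel images stored as flat lists and one weight per light direction. The function and parameter names are hypothetical, and this is not the lab's real-time implementation.

```python
def relight(
    basis_images: list[list[float]], light_weights: list[float]
) -> list[float]:
    """Weighted sum of basis images (illustrative sketch).

    basis_images[i][p]: brightness of pixel p when only light i is on.
    light_weights[i]: brightness of the target environment from the
        direction of light i.
    """
    if not basis_images or len(basis_images) != len(light_weights):
        raise ValueError("need exactly one weight per basis image")
    num_pixels = len(basis_images[0])
    relit = [0.0] * num_pixels
    for image, weight in zip(basis_images, light_weights):
        for p in range(num_pixels):
            relit[p] += weight * image[p]
    return relit
```

For color images the same sum would be applied per channel. Rendering from a new viewpoint additionally needs the geometry, which this sketch ignores.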

Our main goal at the ICT Graphics Lab is to pursue basic research to enable more compelling and realistic virtual environments (of course, compelling and realistic are not necessarily the same thing). A major component of this is realistic digital actors capable of expressing a wide range of emotions and expressions and performing actions, all while looking good. Applications of these techniques range from more exciting computer games, to better special effects in movies, to 3D video-phones, to medical visualization.