|

|

|

|

|

|

|

|---|---|---|---|---|---|---|

| (a) Capture Setting | (b) Input image | (c) Undistorted image | (d) Reference | (e) Input image | (a) Capture Setting | (g) Reference |

Near-range portrait photographs often contain perspective distortion artifacts that bias human perception and challenge both facial recognition and reconstruction techniques. We present the first deep learning based approach to remove such artifacts from unconstrained portraits. In contrast to the previous state-of-the-art approach, our method handles even portraits with extreme perspective distortion, as we avoid the inaccurate and error-prone step of first fitting a 3D face model. Instead, we predict a distortion correction flow map that encodes a per-pixel displacement that removes distortion artifacts when applied to the input image. Our method also automatically infers missing facial features, i.e. occluded ears caused by strong perspective distortion, with coherent details. We demonstrate that our approach significantly outperforms the previous stateof-the-art both qualitatively and quantitatively, particularly for portraits with extreme perspective distortion or facial expressions. We further show that our technique benefits a number of fundamental tasks, significantly improving the accuracy of both face recognition and 3D reconstruction and enables a novel camera calibration technique from a single portrait. Moreover, we also build the first perspective portrait database with a large diversity in identities, expression and poses.

The overview of our system is shown in Fig. 2. We pre-process the input portraits with background segmentation, scaling, and spatial alignment (see appendix), and then feed them to a camera distance prediction network to estimates camera-to-subject distance. The estimated distance and the portrait are fed into our cascaded network including FlowNet, which predicts a distortion correction flow map, and CompletionNet, which inpaints any missing facial features caused by perspective distortion. Perspective undistortion is not a typical image-to-image translation problem, because the input and output pixels are not spatially corresponded. Thus, we factor this challenging problem into two sub tasks: first finding a per-pixel undistortion flow map, and then image completion via inpainting. In particular, the vectorized flow representation undistorts an input image at its original resolution, preserving its high frequency details, which would be challenging if using only generative image synthesis techniques. In our cascaded architecture (Fig. 3), CompletionNet is fed the warping result of FlowNet. We provide details of FlowNet and CompletionNet in Sec. 4. Finally, we combined the results of the two cascaded networks using the Laplacian blending [1] to synthesize highresolution details while maintaining plausible blending with existing skin texture.

The distortion-free result will then be fed into the CompletionNet, which focuses on inpainting missing features and filling the holes. Note that as trained on a vast number of paired examples with large variations of camera distance, CompletionNet has learned an adaptive mapping regarding to varying distortion magnitude inputs.

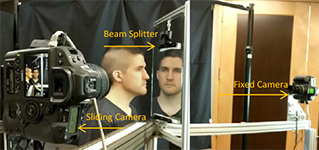

We have presented the first automatic approach that corrects the perspective distortion of unconstrained near-range portraits. Our approach even handles extreme distortions. We proposed a novel cascade network including camera parameter prediction network, forward flow prediction network and feature inpainting network. We also built the first database of perspective portraits pairs with a large variations on identities, expressions, illuminations and head poses. Furthermore, we designed a novel duo-camera system to capture testing images pairs of real human. Our approach significantly outperforms the state-of-the-art approach on the task of perspective undistortion with an accurate camera parameter prediction. Our approach also boosts the performance of fundamental tasks like face verification, landmark detection and 3D face reconstruction.