We present a novel method for acquisition, modeling, compression, and synthesis of

realistic facial deformations using polynomial displacement maps. Our method consists

of an analysis phase where the relationship between motion capture markers and detailed

facial geometry is inferred, and a synthesis phase where novel detailed animated facial

geometry is driven solely by a sparse set of motion capture markers. For analysis,

we record an actor wearing facial markers while performing a set of training expression clips.

We capture real-time high-resolution facial deformations, including dynamic wrinkle and

pore detail, using interleaved structured light 3D scanning and photometric stereo.

Next, we compute displacements between a neutral mesh driven by the motion capture

markers and the high-resolution captured expressions. These geometric displacements

are stored in a polynomial displacement map which is parameterized according to the

local deformations of the motion capture markers. For synthesis, we drive the polynomial

displacement map with new motion capture data. This allows the recreation of

large-scale muscle deformation, medium and fine wrinkles, and dynamic skin pore detail.

Applications include the compression of existing performance data and the synthesis

of new performances.

Our technique is independent of the underlying geometry capture system and can be

used to automatically generate high-frequency wrinkle and pore details on top of

many existing facial animation systems.

Currently, creating realistic virtual faces often involves capturing textures,

geometry, and facial motion of real people. However, it is difficult to capture

and represent facial dynamics accurately at all scales. Face scanning systems can

acquire high-resolution facial textures and geometry, but typically only for static

poses. Motion capture techniques record continuous facial motion, but only at a

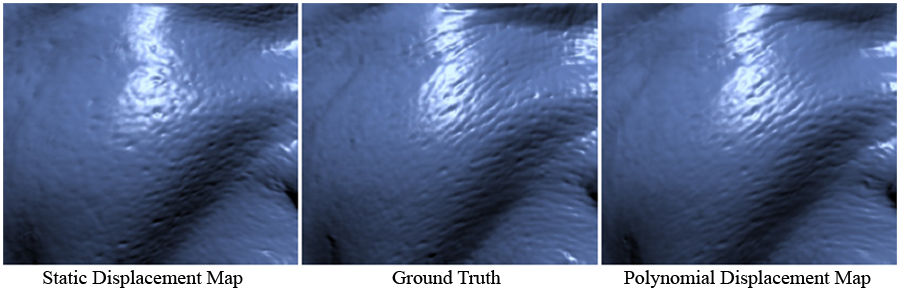

coarse level of detail. Straightforward techniques of driving high-resolution character

models by relatively coarse motion capture data often fails to produce realistic motion

at medium and fine scales (top image).

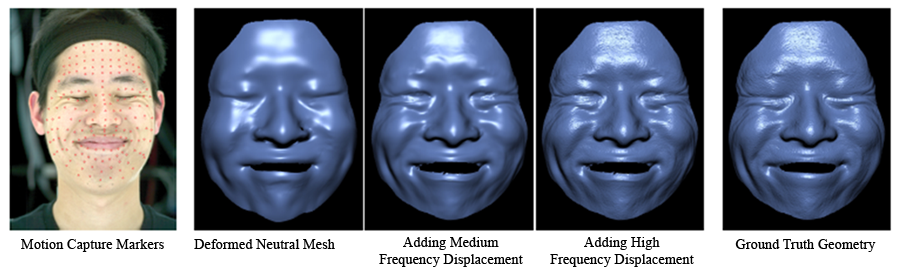

In this work, we introduce a novel automated method for modeling and synthesizing

facial performances with realistic dynamic wrinkles and fine-scale facial details.

Our approach is to leverage a real-time 3D scanning system to record training data

of the high-resolution geometry and appearance of an actor performing a small set

of predetermined facial expressions. Additionally, a set of motion capture markers

is placed on the face to track large-scale deformations. Next, we relate these

large-scale deformations to the deformations at finer scales. We represent this

relation compactly in the form of two deformation-driven polynomial displacement

maps (PDMs), encoding variations in medium-scale and fine-scale displacements for

a face undergoing motion (bottom image).

We synthesize new high-resolution geometry and surface detail from sparse motion

capture markers using deformation-driven polynomial displacement maps; our results

agree well with high-resolution ground truth geometry of dynamic facial performances.
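
To make the idea of a deformation-driven polynomial displacement map concrete, the following is a minimal sketch rather than the implementation used in this work. It assumes a biquadratic polynomial in two local deformation parameters per texel, in the style of polynomial texture maps; the text above does not specify the polynomial degree or basis. The function names `poly_basis`, `fit_pdm`, and `eval_pdm`, and the parameters `d1` and `d2`, are hypothetical. Fitting corresponds to the analysis phase (one least-squares problem per texel over the training frames), and evaluation corresponds to the synthesis phase (new deformation parameters in, displacements out).

```python
import numpy as np

def poly_basis(d1, d2):
    """Biquadratic basis in two deformation parameters (an assumed form)."""
    d1 = np.asarray(d1, dtype=float)
    d2 = np.asarray(d2, dtype=float)
    return np.stack([d1**2, d2**2, d1 * d2, d1, d2, np.ones_like(d1)], axis=-1)

def fit_pdm(d1, d2, disp):
    """Analysis: fit six polynomial coefficients per texel by least squares.

    d1, d2 : (F, H, W) local deformation parameters for F training frames
    disp   : (F, H, W) measured displacement per frame and texel
    returns: (H, W, 6) coefficient map
    """
    F, H, W = disp.shape
    basis = poly_basis(d1, d2)  # shape (F, H, W, 6)
    coeffs = np.empty((H, W, 6))
    for y in range(H):
        for x in range(W):
            # Solve basis[:, y, x, :] @ c ~= disp[:, y, x] for this texel.
            solution = np.linalg.lstsq(basis[:, y, x, :], disp[:, y, x], rcond=None)[0]
            coeffs[y, x] = solution
    return coeffs

def eval_pdm(coeffs, d1, d2):
    """Synthesis: evaluate the displacement map for new deformation parameters."""
    return np.sum(coeffs * poly_basis(d1, d2), axis=-1)
```

With six coefficients per texel, at least six training frames with sufficiently varied deformation are needed for the per-texel fit to be well determined; in this sketch, fewer frames would make `np.linalg.lstsq` return a minimum-norm solution instead.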