We present the first approach to volumetric performance capture and novel-view rendering at real-time speed from monocular video, eliminating the need for expensive multi-view systems or cumbersome pre-acquisition of a personalized template model. Our system reconstructs a fully textured 3D human from each frame by leveraging the Pixel-Aligned Implicit Function (PIFu). While PIFu achieves high-resolution reconstruction in a memory-efficient manner, its computationally expensive inference prevents us from deploying such a system for real-time applications. To this end, we propose a novel hierarchical surface localization algorithm and a direct rendering method that does not explicitly extract surface meshes. By culling unnecessary regions for evaluation in a coarse-to-fine manner, we accelerate the reconstruction by two orders of magnitude over the baseline without compromising quality. Furthermore, we introduce an Online Hard Example Mining (OHEM) technique that effectively suppresses failure modes due to the rare occurrence of challenging examples. We adaptively update the sampling probability of the training data based on the current reconstruction accuracy, which effectively alleviates reconstruction artifacts. Our experiments and evaluations demonstrate the robustness of our system to various challenging angles, illuminations, poses, and clothing styles. We also show that our approach compares favorably with state-of-the-art monocular performance capture methods. Our proposed approach removes the need for multi-view studio settings and enables a consumer-accessible solution for volumetric capture.

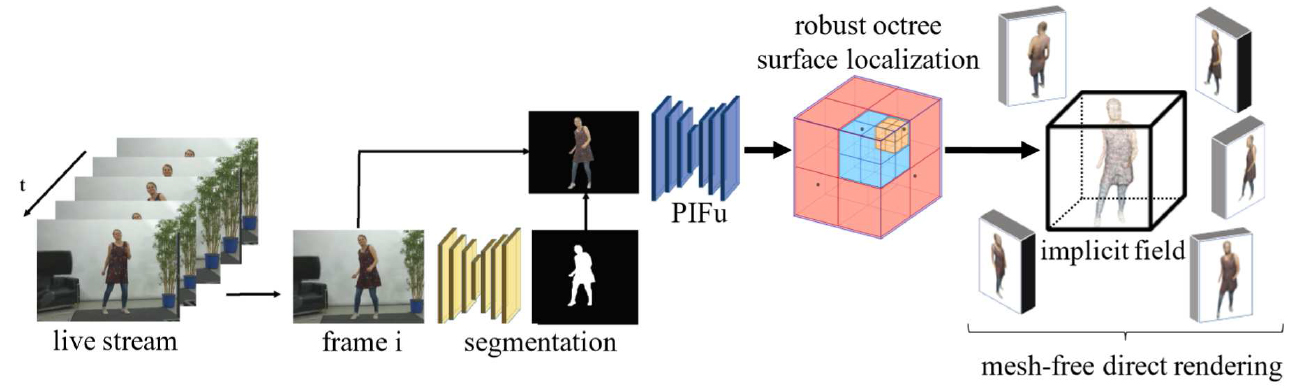

In this section, we describe the overall pipeline of our algorithm for real-time volumetric capture (Fig. 2). Given a live stream of RGB images, our goal is to obtain the complete 3D geometry of the performing subject in real-time with the full textured surface, including unseen regions. To achieve an accessible solution with minimal requirements, we process each frame independently, as tracking-based solutions are prone to accumulating errors and sensitive to initialization, causing drift and instability [49, 88]. Although recent approaches have demonstrated that the use of anchor frames [3, 10] can alleviate drift, ad-hoc engineering is still required to handle common but extremely challenging scenarios such as changing the subject.

For each frame, we first apply real-time segmentation of the subject from the background. The segmented image is then fed into our enhanced Pixel-Aligned Implicit Function (PIFu) [61] to predict continuous occupancy fields where the underlying surface is defined as a 0.5-level set. Once the surface is determined, texture inference on the surface geometry is also performed using PIFu, allowing for rendering from any viewpoint for various applications. As this deep learning framework with effective 3D shape representation is the core building block of the proposed system, we review it in Sec. 3.1, describe our enhancements to it, and point out the limitations on its surface inference and rendering speed. At the heart of our system, we develop a novel acceleration framework that enables real-time inference and rendering from novel viewpoints using PIFu (Sec. 3.2). We further improve the robustness of the system by sampling hard examples on the fly to efficiently suppress failure modes in a manner inspired by Online Hard Example Mining [64] (Sec. 3.3).
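
The idea of sampling hard examples on the fly can be illustrated with a minimal sketch. It assumes that a running reconstruction error is kept per training example and that sampling weights are proportional to that error. The function names, the exponential moving average, and the floor `eps` are illustrative assumptions and are not taken from Sec. 3.3.

```python
import random


def update_errors(errors, batch_indices, batch_errors, momentum=0.9):
    """Blend the newest error of each sampled example into its running error."""
    for i, e in zip(batch_indices, batch_errors):
        errors[i] = momentum * errors[i] + (1.0 - momentum) * e
    return errors


def sampling_probabilities(errors, eps=1e-3):
    """Normalize running errors into probabilities.

    eps is a hypothetical floor so that easy examples are still drawn sometimes.
    """
    weights = [e + eps for e in errors]
    total = sum(weights)
    return [w / total for w in weights]


def sample_batch(errors, batch_size, rng, eps=1e-3):
    """Draw example indices with replacement, favouring high-error examples."""
    probs = sampling_probabilities(errors, eps)
    return rng.choices(range(len(errors)), weights=probs, k=batch_size)
```

In a training loop under these assumptions, `sample_batch` would choose the next examples, and `update_errors` would be called with the errors measured on that batch, so that examples the network currently reconstructs poorly are revisited more often.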

To reduce the computation required for real-time performance capture, we introduce two novel acceleration techniques. First, we present an efficient surface localization algorithm that retains the accuracy of the brute-force reconstruction with the same complexity as naive octree-based reconstruction algorithms. Furthermore, since our final outputs are renderings from novel viewpoints, we bypass the explicit mesh reconstruction stage by directly generating a novel-view rendering from PIFu. By combining these two algorithms, we can successfully render the performance from arbitrary viewpoints in real-time. We describe each algorithm in detail below.
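
To make the idea of rendering without an intermediate mesh concrete, the following sketch marches along a camera ray through an occupancy function and returns the first crossing of the 0.5 level. It is a generic ray-marching illustration, not the rendering method of this work: the analytic `soft_sphere` stands in for the network, and `first_hit`, the uniform step count, and the linear interpolation are assumptions.

```python
import math


def soft_sphere(p, radius=0.5, sharpness=20.0):
    """Toy occupancy in [0, 1]: above 0.5 inside the sphere, below 0.5 outside."""
    r = math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2)
    return 1.0 / (1.0 + math.exp(sharpness * (r - radius)))


def first_hit(f, origin, direction, t_max=2.0, steps=64, iso=0.5):
    """Return the ray parameter t of the first outside-to-inside crossing, or None."""
    prev_t, prev_v = 0.0, f(origin)
    for s in range(1, steps + 1):
        t = t_max * s / steps
        p = tuple(o + t * d for o, d in zip(origin, direction))
        v = f(p)
        if prev_v < iso <= v:
            # Linearly interpolate between the two samples that bracket the surface.
            return prev_t + (iso - prev_v) / (v - prev_v) * (t - prev_t)
        prev_t, prev_v = t, v
    return None


def render_depth(f, res=8):
    """Orthographic depth map: one ray per pixel, cast along +z from the plane z = -1."""
    coords = [-1.0 + 2.0 * (i + 0.5) / res for i in range(res)]
    return [[first_hit(f, (x, y, -1.0), (0.0, 0.0, 1.0)) for x in coords]
            for y in coords]
```

Each pixel needs occupancy values only along its own ray, so the cost scales with the image resolution rather than with a dense 3D grid. Color could then be queried at the returned hit points.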

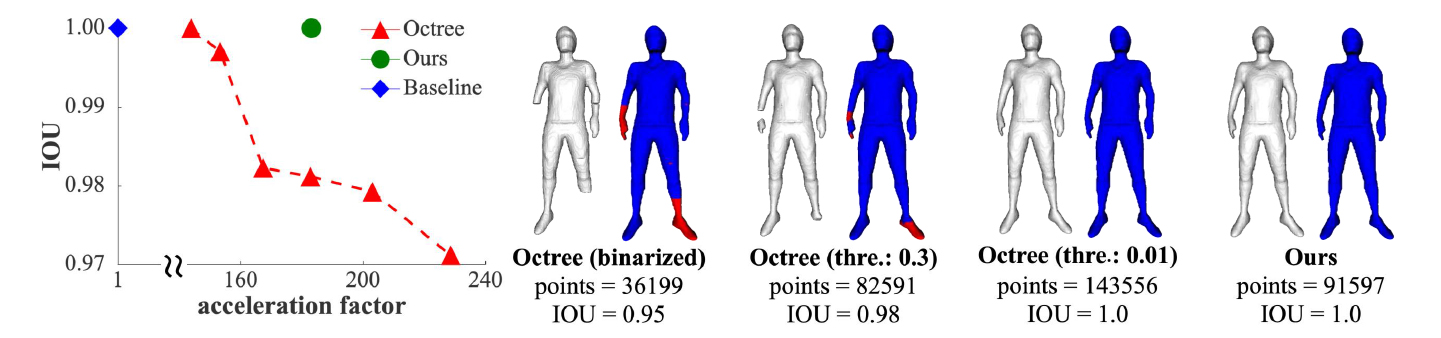

Octree-based Robust Surface Localization. The major bottleneck of the pipeline is the evaluation of implicit functions represented by an MLP at an excessive number of 3D locations. Thus, substantially reducing the number of points to be evaluated would greatly increase the performance. The octree is a common data representation for efficient shape reconstruction [87] which hierarchically reduces the number of nodes in which to store data. To apply an octree to an implicit surface parameterized by a neural network, [45] recently proposed an algorithm that subdivides a grid cell only if it is adjacent to boundary nodes (i.e., the interface between inside and outside nodes) after binarizing the predicted occupancy values. We found that this approach often produces inaccurate reconstructions compared to the surface reconstructed by the brute-force baseline (see Fig. 3). Since a predicted occupancy value is a continuous value in the range [0, 1], indicating the confidence in and proximity to the surface, another approach is to subdivide grid cells if the maximum absolute deviation of the neighboring coarse grid values is larger than a threshold. While this approach allows for control over the trade-off between reconstruction accuracy and acceleration, we also found that this algorithm either evaluates an excessive number of unnecessary points to achieve accurate reconstruction or suffers from impaired reconstruction quality in exchange for higher acceleration. To this end, we introduce a surface localization algorithm that hierarchically and precisely determines the boundary nodes.
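
The threshold-based baseline discussed above can be sketched as follows. This is a simplified variant written for illustration, not the proposed localization algorithm: a cell is subdivided when the spread (maximum minus minimum) of the occupancy at its eight corners exceeds a threshold `tau`. The toy `soft_sphere` occupancy, the function names, and the default parameters are assumptions.

```python
import itertools
import math

CORNERS = list(itertools.product((0, 1), repeat=3))


def soft_sphere(p, radius=0.5, sharpness=20.0):
    """Toy occupancy in [0, 1]; the 0.5 level set is a sphere of the given radius."""
    r = math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2)
    return 1.0 / (1.0 + math.exp(sharpness * (r - radius)))


def localize_surface(f, start_level=2, depth=5, tau=0.05, iso=0.5):
    """Coarse-to-fine search on [-1, 1]^3.

    Returns the finest-level cells whose corners straddle iso, and the
    number of distinct points at which f was evaluated.
    """
    finest = 2 ** depth
    cache = {}  # finest-grid integer coordinates -> occupancy value

    def corner_value(i, j, k, level):
        scale = 2 ** (depth - level)
        key = (i * scale, j * scale, k * scale)
        if key not in cache:
            cache[key] = f(tuple(-1.0 + 2.0 * c / finest for c in key))
        return cache[key]

    cells = list(itertools.product(range(2 ** start_level), repeat=3))
    for level in range(start_level, depth + 1):
        kept = []
        for (i, j, k) in cells:
            vals = [corner_value(i + a, j + b, k + c, level) for a, b, c in CORNERS]
            lo, hi = min(vals), max(vals)
            if level == depth:
                if lo <= iso <= hi:  # the surface passes through this finest cell
                    kept.append((i, j, k))
            elif hi - lo > tau:  # corner values disagree: subdivide into 8 children
                kept.extend((2 * i + a, 2 * j + b, 2 * k + c) for a, b, c in CORNERS)
        cells = kept
    return cells, len(cache)
```

The trade-off described above is visible in `tau`: a small threshold subdivides many cells that contain no surface, whereas a large one can discard a coarse cell whose corners happen to agree even though the surface passes through it.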

We train our networks using NVIDIA GV100s with 512 × 512 images. During inference, we use a Logitech C920 webcam on a desktop system equipped with 62 GB RAM, a 6-core Intel i7-5930K processor, and 2 GV100s. One GPU performs geometry and color inference, while the other performs surface reconstruction; the two stages run asynchronously and in parallel when processing multiple frames. The overall latency of our system is 0.25 seconds on average.

We evaluate our proposed algorithms on the RenderPeople [57] and BUFF [82] datasets, and on self-captured performances. In particular, as public datasets of 3D clothed humans in motion are highly limited, we use the BUFF dataset [82] for quantitative comparison and evaluation and report the average error measured by the Chamfer distance and point-to-surface (P2S) distance from the prediction to the ground truth. We provide implementation details, including the training dataset and real-time segmentation module, in the appendix.
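
For reference, the two error measures can be sketched on sampled point sets. The exact conventions (mean versus sum, squared versus unsquared distances) vary between papers, so the definitions below are assumptions. The one-sided distance to ground-truth samples only approximates a true point-to-surface distance, which would measure the distance to the ground-truth mesh triangles.

```python
import math


def nearest_distance(p, points):
    """Euclidean distance from p to its nearest neighbour in points (brute force)."""
    return min(math.dist(p, q) for q in points)


def one_sided_distance(src, dst):
    """Mean nearest-neighbour distance from src to dst.

    With src = predicted points and dst = dense ground-truth samples, this
    approximates the P2S distance from the prediction to the ground truth.
    """
    return sum(nearest_distance(p, dst) for p in src) / len(src)


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: the average of the two one-sided distances."""
    return 0.5 * (one_sided_distance(a, b) + one_sided_distance(b, a))
```

The brute-force search costs O(|a| · |b|) distance computations. A spatial index such as a k-d tree would be the usual choice for full-resolution scans.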

In Fig. 1, we demonstrate our real-time performance capture and rendering from a single RGB camera. Because both the reconstructed geometry and texture inference for unseen regions are plausible, we can obtain novel-view renderings in real-time from a wide range of poses and clothing styles. We provide additional results with various poses, illuminations, viewing angles, and clothing in the appendix and supplemental video.