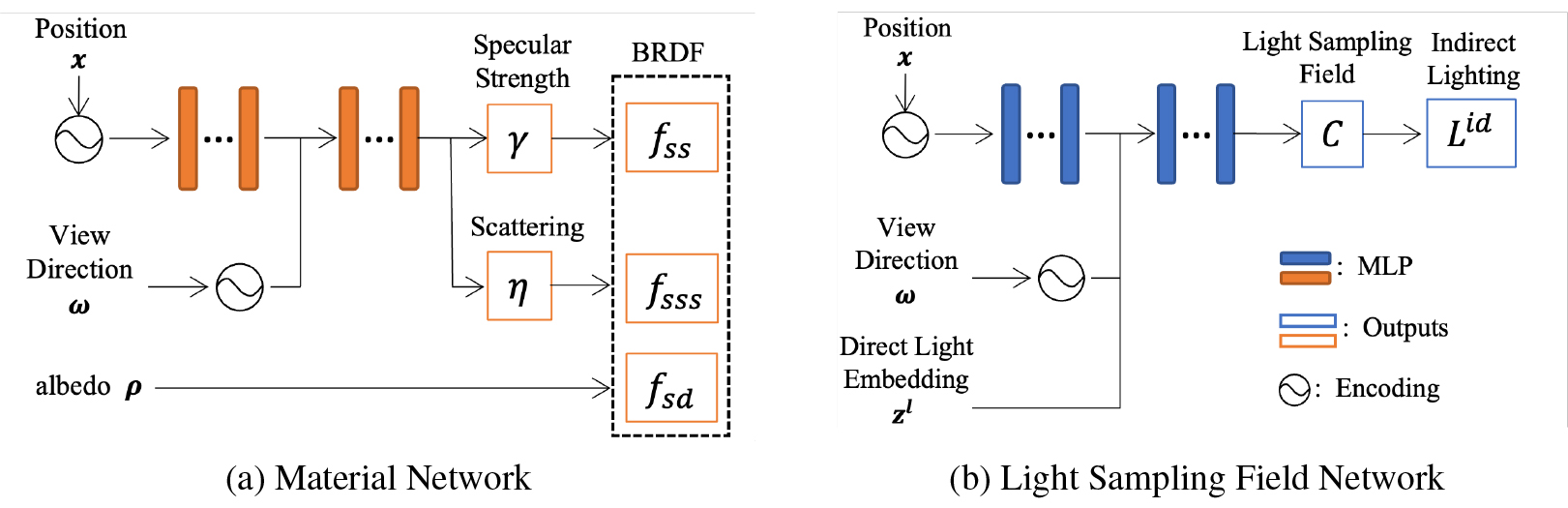

Physically-based rendering (PBR) is key to the immersive rendering effects widely used in industry to showcase detailed, realistic scenes from computer graphics assets. A well-known caveat is that producing such renderings is computationally heavy and relies on complex capture devices. Inspired by the quality and efficiency of recent volumetric neural rendering, we aim to develop a physically-based neural shader that eliminates device dependency and significantly boosts performance. However, no existing lighting and material models in current neural rendering approaches can accurately represent the comprehensive lighting models and BRDF properties required by the PBR process. Thus, this paper proposes a novel lighting representation that models direct and indirect light locally through a light sampling strategy in a learned light sampling field. We also propose BRDF models that separately represent surface and subsurface scattering details, enabling complex objects made of translucent materials (e.g., skin, jade). We then implement our proposed representations in an end-to-end physically-based neural face skin shader, which takes a standard face asset (i.e., geometry, albedo map, and normal map) and an HDRI for illumination as inputs and generates a photo-realistic rendering as output. Extensive experiments showcase the quality and efficiency of our PBR face skin shader, indicating the effectiveness of our proposed lighting and material representations.

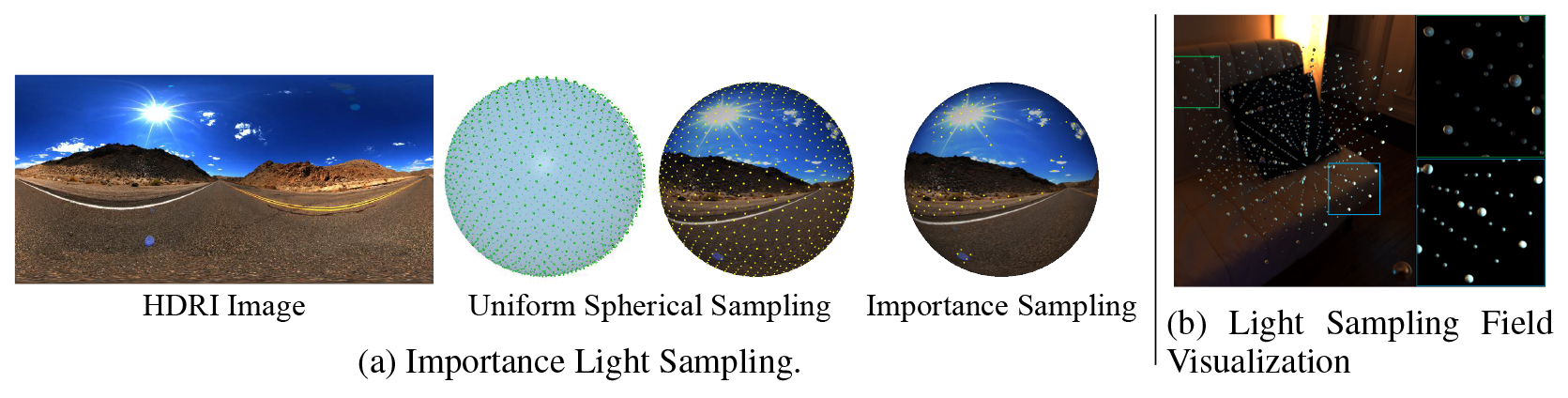

Direct radiance comes directly from the HDRI map. To compute the contribution of HDRI pixels to different points in our radiance field, we use a SkyDome to represent direct illumination by projecting the HDRI environment map onto a sphere. Each pixel on the sphere is regarded as a distant directional light source. Hence, direct lighting is identical at all locations in the radiance field. Such a representation preserves specular reflection, which is usually achieved by ray tracing methods. We construct the representation in two steps. First, we uniformly sample a point grid of size N = 800 on the sphere, where each point is a light source candidate. Second, we apply our importance light sampling method to filter valid candidates using two thresholds: 1) an intensity threshold that clips the intensity of outliers with extreme values; 2) an importance threshold that filters out outliers in textureless regions. Fig. 2a illustrates this process.
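The two construction steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: the Fibonacci lattice stands in for the unspecified uniform point grid, and the function names, the per-candidate importance scores, and the threshold values are hypothetical.

```python
import math

def fibonacci_sphere(n):
    """Return n approximately uniform unit directions on the sphere.

    Assumption: a Fibonacci lattice is used here as one common way to
    obtain a near-uniform point set; the text does not specify the grid.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n           # evenly spaced heights in (-1, 1)
        r = math.sqrt(max(0.0, 1.0 - z * z))    # ring radius at height z
        phi = golden_angle * i                  # azimuth advances by the golden angle
        points.append((r * math.cos(phi), r * math.sin(phi), z))
    return points

def filter_candidates(intensities, importances, intensity_max, importance_min):
    """Apply the two thresholds to the light source candidates.

    intensities / importances: one value per candidate (hypothetical inputs).
    Returns (candidate_index, clipped_intensity) for every kept candidate.
    """
    kept = []
    for i, (intensity, importance) in enumerate(zip(intensities, importances)):
        if importance >= importance_min:                  # importance threshold
            kept.append((i, min(intensity, intensity_max)))  # intensity clipping
    return kept

candidates = fibonacci_sphere(800)  # N = 800 light source candidates
```

Here each kept candidate would act as one distant directional light. How the importance score is derived from the HDRI is left open in this sketch.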

Indirect illumination models the incoming light reflected or emitted from surrounding objects, which the traditional PBR pipeline achieves by ray tracing under the assumption of a limited number of bounces. Inspired by the volumetric lightmaps used in Unreal Engine (Karis & Games, 2013), which store precomputed lighting at sampled points and interpolate between them at runtime to model the indirect lighting of dynamic and moving objects, we adopt a continuous Light Sampling Field to accurately model how illumination varies across scene positions. We use Spherical Harmonics (SH) to model the total incoming light at each sampled location separately. We compute the local SH by multiplying the fixed Laplace’s SH basis with predicted SH parameters. Specifically, we use SH of degree l = 1 for each color channel (RGB). We therefore obtain a 3 (color channels) × 4 (basis functions) = 12-D vector as the local SH representation. We downsample the HDRI map to 100 × 150 resolution and project it onto a sphere. Each pixel on the map is considered an input lighting source. We use the direction and color of each pixel as the lighting embedding, which is fed into a light field sampling network to infer the coefficients of the local SH. We visualize our Light Sampling Field with selected discrete sample points in Fig. 2b.
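As a rough illustration of the local SH representation, the sketch below evaluates a degree-1 expansion with 3 × 4 coefficients for a given direction. It assumes one common real-SH convention (without the Condon-Shortley phase); the function names are hypothetical, and the coefficient values stand in for network predictions.

```python
import math

def sh_basis_l1(direction):
    """Real SH basis up to degree l = 1 for a unit direction (x, y, z).

    Assumption: ordering (Y_0^0, Y_1^-1, Y_1^0, Y_1^1), no Condon-Shortley phase.
    """
    x, y, z = direction
    c0 = 0.5 * math.sqrt(1.0 / math.pi)         # constant (l = 0) term
    c1 = math.sqrt(3.0 / (4.0 * math.pi))       # scale of the linear (l = 1) terms
    return [c0, c1 * y, c1 * z, c1 * x]

def eval_local_sh(coeffs, direction):
    """Evaluate RGB incoming light from a 3 x 4 coefficient table.

    coeffs: three rows (R, G, B) of four SH coefficients each, i.e. the
    12-D local SH vector reshaped per color channel.
    """
    basis = sh_basis_l1(direction)
    return [sum(c * b for c, b in zip(channel, basis)) for channel in coeffs]

# Placeholder coefficients; in the described pipeline these would be
# predicted per sampled location by the light field sampling network.
coeffs = [
    [1.0, 0.0, 0.2, 0.0],   # R
    [0.8, 0.0, 0.1, 0.0],   # G
    [0.6, 0.0, 0.0, 0.0],   # B
]
rgb = eval_local_sh(coeffs, (0.0, 0.0, 1.0))
```

With degree 1, each channel captures only a constant term plus a single dominant direction, which keeps the per-location representation at 12 values.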

We demonstrate that prior neural rendering representations for physically-based rendering fail to accurately model environment lighting or capture subsurface details. In this work, we propose a differentiable light sampling field network that models dynamic illumination and indirect lighting in a lightweight manner. In addition, we propose a flexible material network that models subsurface scattering for complicated materials such as the human face. Experiments on both synthetic and real-world datasets demonstrate that our light sampling field and material network collectively improve rendering quality under complicated illumination compared with prior work. In the future, we will focus on modeling more complicated materials such as translucent materials and participating media. We will also collect datasets of general objects and apply them to a wider range of tasks such as inverse rendering.