We propose a lightweight yet highly robust method for real-time human performance capture based on a single depth camera and sparse inertial measurement units (IMUs). Our method combines nonrigid surface tracking and volumetric fusion to simultaneously reconstruct challenging motions, detailed geometry, and the inner human body of a clothed subject. The proposed hybrid motion tracking algorithm and efficient per-frame sensor calibration technique enable nonrigid surface reconstruction for fast motions and challenging poses with severe occlusions. Significant fusion artifacts are reduced using a new confidence measurement for our adaptive TSDF-based fusion. These contributions are mutually beneficial within our reconstruction system and together enable practical human performance capture that is real-time, robust, low-cost, and easy to deploy. Experiments show that extremely challenging performances and loop closure problems can be handled successfully.

The 3D acquisition of human performances has been a challenging topic for decades due to the shape and deformation complexity of dynamic surfaces, especially for clothed subjects. To ensure high-fidelity digitization, sophisticated multi-camera array systems [8, 4, 5, 44, 17, 24, 7, 14, 30] are preferred for professional productions. TotalCapture [13], the state-of-the-art human performance capture system, uses more than 500 cameras to minimize occlusions during human-object interactions. Such systems are not only costly and difficult to deploy but also require significant synchronization, calibration, and data processing effort.

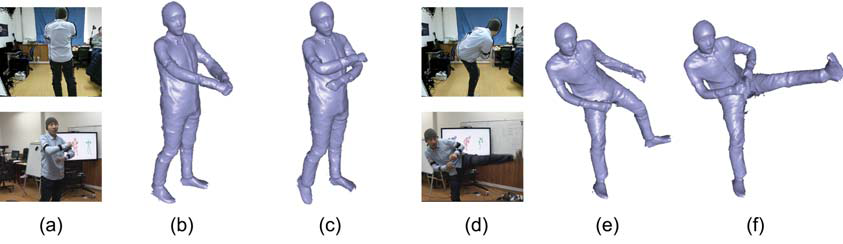

At the other end of the spectrum, the recent trend of using a single depth camera for dynamic scene reconstruction [25, 12, 10, 32] provides a very convenient, real-time approach to performance capture combined with online nonrigid volumetric depth fusion. However, such monocular systems are limited to slow and controlled motions. Although recent systems such as BodyFusion [45], DoubleFusion [46], and SobolevFusion [33] have demonstrated improvements, it is still impossible to reconstruct occluded limb motions (Fig. 1(b)) or to ensure loop closure during online reconstruction. For practical deployment, such as gaming, where fast motions and possibly interactions between multiple users are expected, continuously reliable performance capture is essential.
