Head-mounted cameras are an increasingly important tool for capturing facial performances to drive virtual characters. They provide a fixed, unoccluded view of the face, useful for observing motion capture dots or as input to video analysis. However, the 2D imagery captured with these systems is typically affected by ambient light and generally fails to record subtle 3D shape changes as the face performs. We have developed a system that augments a head-mounted camera with LED-based photometric stereo. The system allows observation of the face independent of the ambient light and records per-pixel surface normals, allowing the performance to be recorded dynamically in 3D. The resulting data can be used for facial relighting or as better input to machine learning algorithms for driving an animated face.

Three individual lighting banks encircle a convex mirror, which is suspended approximately 20 centimeters from the performer's face. Each bank supports four near-infrared LEDs, keeping the illumination outside the visible spectrum so as not to disrupt the wearer's performance. Each bank is individually controlled, allowing us to repeat a sequence of three illumination patterns followed by an unlit frame to record and subtract the environment's ambient light. A Point Grey Flea 3 camera captures the wide-angle view of the face reflected in the mirror. The camera is mounted on the side of the head to reduce weight. Crossed linear infrared polarizers are mounted over the lighting banks and the camera lens, allowing us to attenuate the specular reflection from the face and remove LED glare. Together the camera and lights run at 120 fps, yielding all four lighting conditions at 30 fps. To correct for subject motion, we compute optical flow between similar illumination patterns to temporally align each set of patterns.
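
The frame sequencing and ambient subtraction described above can be sketched as follows. This is a minimal illustration, not the system's actual code: the function name `demultiplex`, the assumption that frame `i` carries pattern `i % 4`, and the assumption that the unlit frame is last in each cycle are all hypothetical. Optical-flow alignment is omitted.

```python
import numpy as np

PATTERNS_PER_CYCLE = 4  # three lit patterns plus one unlit frame (from the text)
CAMERA_FPS = 120        # camera and lights run at 120 fps (from the text)


def demultiplex(frames):
    """Group a (N, H, W) frame stack into ambient-subtracted lighting sets.

    Assumption (hypothetical): frame i shows pattern i % 4, and the last
    frame of each cycle is the unlit one.
    Returns an array of shape (N // 4, 3, H, W).
    """
    frames = np.asarray(frames, dtype=np.float32)
    n_cycles = frames.shape[0] // PATTERNS_PER_CYCLE
    # Drop any incomplete trailing cycle, then split into (cycle, pattern).
    cycles = frames[: n_cycles * PATTERNS_PER_CYCLE].reshape(
        n_cycles, PATTERNS_PER_CYCLE, *frames.shape[1:]
    )
    lit, dark = cycles[:, :3], cycles[:, 3:4]
    # Subtract the ambient-only frame; clamp noise-induced negatives to zero.
    return np.clip(lit - dark, 0.0, None)


# Each complete set of four conditions arrives at a quarter of the camera rate.
effective_fps = CAMERA_FPS / PATTERNS_PER_CYCLE
```

Subtracting the unlit frame within the same cycle keeps the ambient estimate at most three frames old, which is one reason to interleave it with the lit patterns rather than capture it once.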

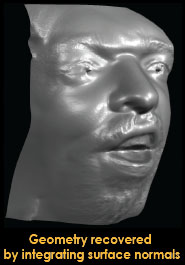

To estimate 3D performance geometry in real time, we combine GPU-based photometric stereo and normal integration. Our light sources are close to the face, violating the photometric stereo assumption of distant illumination. To compensate, we solve for per-pixel lighting directions and intensities. We initialize the lighting parameters using a template face, then iteratively improve the lighting estimates based on the integrated surface depth. We also iteratively correct depth gradients to handle wide-angle lens distortion.
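
One step of the per-pixel lighting solve might look like the sketch below. It rests on several assumptions that the text does not state: each lighting bank is treated as a single point source, the surface is Lambertian (plausible after cross-polarization, but not guaranteed), and intensity falls off with the inverse square of distance. The function name `near_light_normals` and its parameters are hypothetical, and the code runs on the CPU with NumPy rather than on the GPU. Normal integration and the lens-distortion correction are not shown.

```python
import numpy as np


def near_light_normals(images, points, led_positions, led_power=1.0):
    """Estimate normals and albedo under nearby point lights.

    images:        (3, H, W) ambient-subtracted intensities, one per bank
    points:        (H, W, 3) current estimate of surface positions
                   (e.g. from a template face, then from integrated depth)
    led_positions: (3, 3) one assumed point-source position per bank
    Returns (normals (H, W, 3), albedo (H, W)).
    """
    images = np.asarray(images, dtype=np.float64)
    points = np.asarray(points, dtype=np.float64)
    leds = np.asarray(led_positions, dtype=np.float64)

    # Vector from every surface point to every light: (H, W, light, xyz).
    to_light = leds[None, None, :, :] - points[:, :, None, :]
    dist = np.linalg.norm(to_light, axis=-1, keepdims=True)
    # Unit direction times inverse-square falloff = to_light / dist**3.
    L = led_power * to_light / dist**3

    # Per-pixel 3x3 solve of L g = I, where g = albedo * normal.
    I = np.moveaxis(images, 0, -1)[..., None]  # (H, W, 3, 1)
    g = np.linalg.solve(L, I)[..., 0]          # (H, W, 3)

    albedo = np.linalg.norm(g, axis=-1)
    normals = g / np.maximum(albedo, 1e-12)[..., None]
    return normals, albedo
```

In an iterative scheme like the one described, `points` would be refreshed from the integrated depth after each pass and the solve repeated until the lighting estimates stop changing appreciably.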

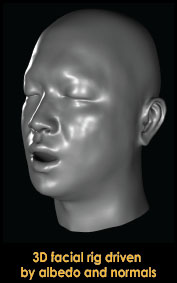

The results can be used as input to a machine learning algorithm that, after an initial training phase, drives a facial rig with the performance. For an initial test, we use an active appearance model [Cootes et al. 2001] to find blendshape weights for a given set of albedo and normal maps. Our initial results are restricted to mouth phonemes, and we are working to extend this algorithm to animate the entire face. Initial results show that analysis of normals and albedo provides smoother animation than analysis of albedo alone. We are also working to minimize the weight of the system, which should not be significantly greater than that of existing rigs, as the LEDs weigh only a few grams each.