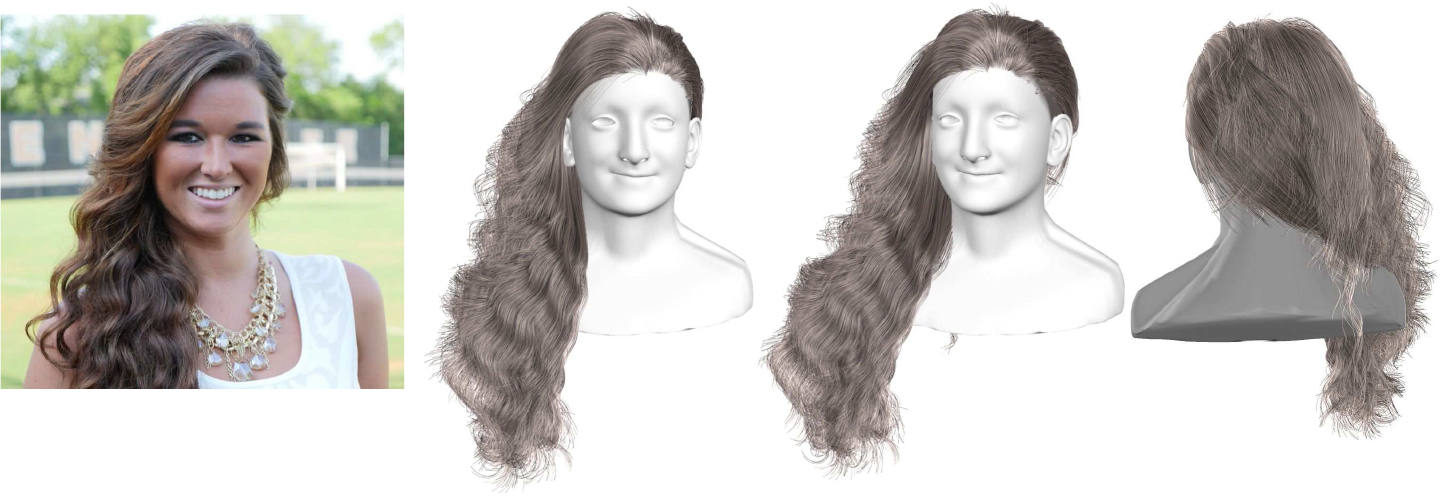

We introduce a deep learning-based method to generate full 3D hair geometry from an unconstrained image. Our method recovers local strand details and runs in real time. State-of-the-art hair modeling techniques rely on large hairstyle collections for nearest-neighbor retrieval followed by ad-hoc refinement. Our deep learning approach, in contrast, is highly storage-efficient and can run 1000 times faster than these retrieval-based methods while generating hair with 30K strands. The convolutional neural network takes the 2D orientation field of a hair image as input and generates strand features that are evenly distributed on the parameterized 2D scalp. We introduce a collision loss to synthesize more plausible hairstyles, and we use the visibility of each strand as a weight term to improve reconstruction accuracy. The encoder-decoder architecture of our network naturally provides a compact and continuous representation for hairstyles, which allows us to interpolate smoothly between them. We train our network on a large set of rendered synthetic hair models. Our method generalizes to real images because an intermediate 2D orientation field, computed automatically from the real image, factors out the differences between synthetic and real hair. We demonstrate the effectiveness and robustness of our method on a wide range of challenging real Internet pictures, and show reconstructed hair sequences from videos.
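The abstract names two training terms: a reconstruction loss weighted by strand visibility and a collision loss that discourages hair from penetrating the body. The following is a minimal sketch of how such terms could be computed, not the paper's exact formulation. It assumes strands are lists of 3D points sampled at matching positions in prediction and ground truth, that visibility is a per-point boolean, and that the head is approximated by a single ellipsoid. The weights `w_visible`, `w_hidden`, `collision_weight` and the ellipsoid parameters are hypothetical illustration values.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Strand = Sequence[Point]


def weighted_position_loss(pred: Sequence[Strand], target: Sequence[Strand],
                           visible: Sequence[Sequence[bool]],
                           w_visible: float = 10.0, w_hidden: float = 1.0) -> float:
    """Mean squared point distance, weighting visible points more heavily.

    The weights are hypothetical; the idea is only that visible points,
    which the image actually constrains, count more than hidden ones.
    """
    total, count = 0.0, 0
    for ps, ts, vs in zip(pred, target, visible):
        for p, t, v in zip(ps, ts, vs):
            w = w_visible if v else w_hidden
            total += w * sum((a - b) ** 2 for a, b in zip(p, t))
            count += 1
    return total / count if count else 0.0


def ellipsoid_collision_loss(strands: Sequence[Strand], center: Point,
                             radii: Point) -> float:
    """Mean penalty for points inside an ellipsoid standing in for the head.

    f(p) = sum(((p - c) / r)^2) is below 1 inside the ellipsoid, so
    max(0, 1 - f) is positive only for penetrating points.
    """
    total, count = 0.0, 0
    for strand in strands:
        for p in strand:
            f = sum(((x - c) / r) ** 2 for x, c, r in zip(p, center, radii))
            total += max(0.0, 1.0 - f)
            count += 1
    return total / count if count else 0.0


def total_loss(pred: Sequence[Strand], target: Sequence[Strand],
               visible: Sequence[Sequence[bool]], center: Point, radii: Point,
               collision_weight: float = 1.0) -> float:
    """Visibility-weighted reconstruction plus a weighted collision term."""
    return (weighted_position_loss(pred, target, visible)
            + collision_weight * ellipsoid_collision_loss(pred, center, radii))


if __name__ == "__main__":
    pred: List[List[Point]] = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
    target: List[List[Point]] = [[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]]
    vis = [[True, False]]
    print(total_loss(pred, target, vis, (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)))
```

In this toy example the first predicted point is both visible and inside the unit "head", so it contributes to both terms, while the second point matches its target and lies exactly on the ellipsoid surface, contributing nothing.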

Realistic hair modeling is one of the most difficult tasks when digitizing virtual humans [3, 20, 25, 27, 14]. In contrast to objects that are easily parameterizable, such as the human face, hair spans a wide range of shape variations and can be highly complex due to its volumetric structure and the deformability of each strand. Although the methods in [28, 22, 2, 26, 38] can create high-quality 3D hair models, they require specialized hardware setups that are difficult to deploy and make widely available. Chai et al. [5, 6] introduced the first simple technique for modeling hair from a single image, but the process requires manual input and cannot properly generate the non-visible parts of the hair. Hu et al. [18] later addressed this problem with a data-driven approach, but some user strokes were still required. More recently, Chai et al. [4] adopted a convolutional neural network to segment the hair in the input image and fully automate the modeling process, and [41] proposed a four-view approach for more flexible control.

We have demonstrated the first deep convolutional neural network capable of real-time hair generation from a single-view image. By training an end-to-end network to directly generate the final hair strands, our method captures more hair details and achieves higher accuracy than the current state of the art. Using the intermediate 2D orientation field as network input provides flexibility: given proper preprocessing, our network can be applied to various types of hair representations, such as images, sketches, and scans. By adopting a multi-scale decoding mechanism, our network can generate hairstyles of arbitrary resolution while maintaining a natural appearance. Thanks to the encoder-decoder architecture, our network provides a continuous hair representation from which plausible hairstyles can be smoothly sampled and interpolated.
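Interpolating within a continuous hair representation can be illustrated by blending two latent codes linearly. This is a minimal sketch, assuming each hairstyle has already been encoded into a fixed-length latent vector. The helper `interpolate_latents` is hypothetical, and turning each intermediate code back into strands would require the trained decoder, which is not shown here.

```python
from typing import List, Sequence


def interpolate_latents(z_a: Sequence[float], z_b: Sequence[float],
                        steps: int) -> List[List[float]]:
    """Return `steps` evenly spaced codes from z_a to z_b (both endpoints included).

    Hypothetical helper: each returned code would be fed to the network's
    decoder to produce an intermediate hairstyle.
    """
    if steps < 2:
        raise ValueError("steps must be at least 2 to include both endpoints")
    if len(z_a) != len(z_b):
        raise ValueError("latent codes must have the same length")
    codes = []
    for i in range(steps):
        t = i / (steps - 1)  # blend factor in [0, 1]
        codes.append([(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return codes


if __name__ == "__main__":
    for code in interpolate_latents([0.0, 2.0], [4.0, 6.0], 3):
        print(code)
```

Linear blending is the simplest choice; it yields plausible in-between hairstyles only to the extent that the learned latent space is smooth, which is the property the encoder-decoder design is meant to provide.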