While hair is an essential component of virtual humans, it is also one of the most challenging digital assets to create. Existing automatic techniques lack the generality and flexibility to create rich hair variations, while manual authoring interfaces often require considerable artistic skill and effort, especially for intricate 3D hair structures that can be difficult to navigate. We propose an interactive hair modeling system that can help create complex hairstyles in minutes or hours that would otherwise take much longer with existing tools. Modelers, including novice users, can focus on the overall hairstyles and local hair deformations, as our system intelligently suggests the desired hair parts.

Our method combines the flexibility of manual authoring with the convenience of data-driven automation. Because hair contains intricate 3D structures such as buns, knots, and strands, it is inherently challenging to create using traditional 2D interfaces. Our system provides a new 3D hair authoring interface for immersive interaction in virtual reality (VR). Users can draw high-level guide strips, from which our system predicts the most plausible hairstyles via a deep neural network trained on a professionally curated dataset.

Each hairstyle in our dataset is composed of multiple variations, which serve as blend-shapes to fit the user drawings via global blending and local deformation. The fitted hair models are visualized as interactive suggestions that the user can select, modify, or ignore. We conducted a user study to confirm that our system can significantly reduce manual labor while improving the output quality for modeling a variety of head and facial hairstyles that are challenging to create via existing techniques.
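Global blending of a hairstyle's variations can be illustrated as a small least-squares fit. The sketch below is a minimal, hypothetical formulation and not the paper's actual energy: it assumes every variation and the user's drawing have already been resampled into corresponding points and flattened into equal-length coordinate lists, and it solves for blend weights that sum to one by writing out the KKT system of the constrained problem. The names `blend_weights`, `blend`, and `_solve` are illustrative only, and the local deformation stage is not shown.

```python
def _dot(a, b):
    """Inner product of two equal-length coordinate lists."""
    return sum(x * y for x, y in zip(a, b))


def _solve(M, rhs):
    """Solve the square system M x = rhs by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    A = [list(row) + [r] for row, r in zip(M, rhs)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(n):
            if r != col:
                f = A[r][col] / A[col][col]
                for c in range(col, n + 1):
                    A[r][c] -= f * A[col][c]
    return [A[i][n] / A[i][i] for i in range(n)]


def blend_weights(variations, target):
    """Hypothetical global blending: argmin_w ||sum_k w_k V_k - U||^2 s.t. sum_k w_k = 1.

    variations: K flattened point lists (one per variation); target: flattened user drawing.
    Weights are only constrained to sum to one, so they may be negative.
    """
    K = len(variations)
    # KKT system: [2 V^T V, 1; 1^T, 0] [w; lambda] = [2 V^T U; 1]
    G = [[2.0 * _dot(vi, vj) for vj in variations] + [1.0] for vi in variations]
    G.append([1.0] * K + [0.0])
    rhs = [2.0 * _dot(vi, target) for vi in variations] + [1.0]
    return _solve(G, rhs)[:K]  # drop the Lagrange multiplier


def blend(variations, weights):
    """Blend-shape combination sum_k w_k V_k, coordinate by coordinate."""
    return [sum(w * v[i] for w, v in zip(weights, variations))
            for i in range(len(variations[0]))]
```

For example, `w = blend_weights([v1, v2], user_points)` followed by `blend([v1, v2], w)` returns the blended sample positions that a local deformation step could then refine.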

|

|

|

|

|

|---|---|---|---|---|

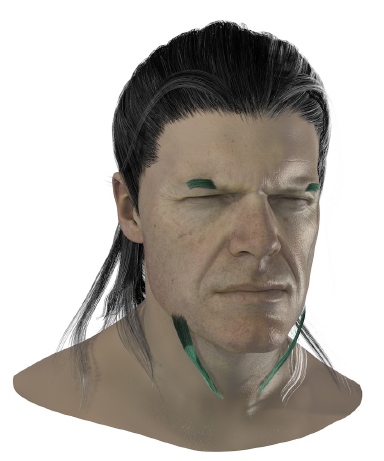

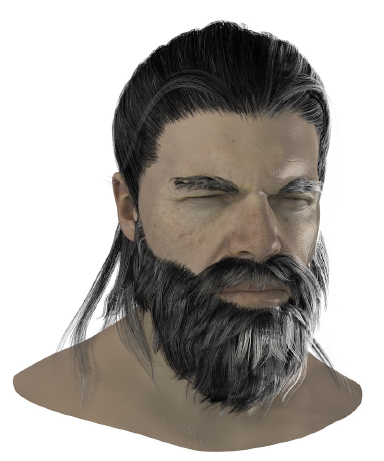

| (a) head hair gestures | (b) prediction | (c) facial hair gestures | (d) prediction | (e) result |

We represent our hair models as textured polygonal strips, which are widely adopted in AAA real-time games such as Final Fantasy 15 and Uncharted 4, and in state-of-the-art real-time performance-driven CG characters such as the “Siren” demo shown at GDC 2018 [35]. Hair strips are flexible to model and highly efficient to render [25], making them suitable for authoring, animating, and rendering multiple virtual characters. The resulting strip-based hair models can also be converted to other formats such as strands. In contrast, with strand-based models a high-end machine can barely render a single character, and existing approaches for converting strands into poly-strips tend to cause adverse rendering effects because they do not consider appearance and textures during optimization.
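To make the strip representation concrete, here is a hedged sketch of one way a single textured strip could be stored: a centerline extruded sideways into a quad strip with per-vertex UVs and two triangles per segment. The fixed `side` vector and the function name `build_strip_mesh` are assumptions for illustration; a production tool would more likely orient each cross-section with a per-sample frame.

```python
def build_strip_mesh(centerline, width, side=(1.0, 0.0, 0.0)):
    """Hypothetical helper: extrude a centerline (>= 2 points) into a textured quad strip.

    Returns (vertices, uvs, triangles); triangles index into vertices.
    """
    n = len(centerline)
    half = 0.5 * width
    vertices, uvs, triangles = [], [], []
    for i, p in enumerate(centerline):
        v = i / (n - 1)  # texture coordinate along the strip: root = 0, tip = 1
        vertices.append(tuple(pc - half * sc for pc, sc in zip(p, side)))  # left edge
        vertices.append(tuple(pc + half * sc for pc, sc in zip(p, side)))  # right edge
        uvs += [(0.0, v), (1.0, v)]
    for i in range(n - 1):
        a, b, c, d = 2 * i, 2 * i + 1, 2 * i + 2, 2 * i + 3
        triangles += [(a, b, c), (b, d, c)]  # two triangles per quad segment
    return vertices, uvs, triangles
```

Because the texture carries the fine strand detail, a strip needs only a handful of quads, which is the source of the rendering efficiency noted above.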

To connect imprecise manual interactions with detailed hairstyles, we design a deep neural network architecture that classifies a sparse and varying number of input strokes into a matching hairstyle in a database. We first ask hair modeling artists to manually create a strip-based hair database with diverse styles and structures. We then expand this initial database using non-rigid deformations, so that the deformed hair models share the same topology (e.g., ponytail) but vary in length and shape. To simulate realistic usage scenarios, we train the network using varying numbers of sparse, representative strokes. To amplify the training power of the limited dataset and to enhance the robustness of classification, the network maps pairs of strips, rather than individual strips, into a latent feature space. The mapping stage uses layers with shared parameters followed by max-pooling, which ensures that our network scales to an arbitrary number of user strokes.
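The permutation-invariant pair encoding described above can be sketched as follows. This is a toy, untrained stand-in in plain Python, not the paper's architecture: each stroke is assumed to be a fixed-length feature vector, every ordered pair of strokes passes through the same dense + ReLU layer (shared parameters), and an element-wise max over all pairs yields a latent vector whose size does not depend on the number of strokes. The class name, the random weights, and the fallback that pairs a lone stroke with itself are all hypothetical.

```python
import itertools
import random


def relu(v):
    return [max(0.0, x) for x in v]


def dense(W, b, x):
    """Fully connected layer: W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


class PairwiseStrokeEncoder:
    """Toy stand-in: shared pair layer + max-pooling + linear classifier (untrained)."""

    def __init__(self, stroke_dim, latent_dim, num_styles, seed=0):
        rng = random.Random(seed)
        # One weight matrix shared by every stroke pair.
        self.W = [[rng.uniform(-1, 1) for _ in range(2 * stroke_dim)] for _ in range(latent_dim)]
        self.b = [0.0] * latent_dim
        # Linear scoring head over hypothetical hairstyle classes.
        self.C = [[rng.uniform(-1, 1) for _ in range(latent_dim)] for _ in range(num_styles)]

    def encode(self, strokes):
        """Map >= 1 strokes to a fixed-size latent vector."""
        pairs = list(itertools.permutations(strokes, 2)) or [(strokes[0], strokes[0])]
        feats = [relu(dense(self.W, self.b, list(p) + list(q))) for p, q in pairs]
        return [max(col) for col in zip(*feats)]  # element-wise max over all pairs

    def classify(self, strokes):
        """Index of the highest-scoring hairstyle class."""
        scores = dense(self.C, [0.0] * len(self.C), self.encode(strokes))
        return max(range(len(scores)), key=scores.__getitem__)
```

Because max-pooling ignores the order of its inputs, reordering the user's strokes leaves the latent code unchanged, and drawing more strokes only adds pairs to pool over.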

We first resolve strip-head collisions. To accelerate the computation, we precompute a dense volumetric level-set field with respect to the scalp surface. For each grid cell, its direction and distance to the scalp are also precomputed and stored. At run time, samples inside the head are detected and projected back to their nearest points on the scalp, while samples outside the head remain fixed.
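A minimal sketch of this collision step, under loudly stated assumptions: the scalp is replaced by a hypothetical sphere of radius `radius` centered at the origin, the grid is a plain dictionary, and each run-time sample reads the signed distance and outward direction stored at its nearest grid node (no interpolation), so the projection is only as accurate as the grid spacing. For a point inside the head, the direction to the nearest scalp point is the outward normal, which is what is stored. Samples with negative distance are pushed out along that direction; all others stay fixed.

```python
import math


def build_levelset(radius, lo, hi, h):
    """Precompute signed distance and outward direction on a regular grid.

    The spherical 'scalp' centered at the origin is a hypothetical stand-in for a real head mesh.
    """
    n = int(round((hi - lo) / h)) + 1
    cells = {}
    for i in range(n):
        for j in range(n):
            for k in range(n):
                x = (lo + i * h, lo + j * h, lo + k * h)
                r = math.sqrt(sum(c * c for c in x))
                phi = r - radius  # negative inside the head
                normal = tuple(c / r for c in x) if r > 0 else (0.0, 0.0, 1.0)
                cells[(i, j, k)] = (phi, normal)
    return {"lo": lo, "h": h, "n": n, "cells": cells}


def resolve_collisions(samples, field):
    """Project samples inside the head onto the scalp; leave outside samples fixed."""
    lo, h, n = field["lo"], field["h"], field["n"]
    out = []
    for p in samples:
        # Nearest grid node, clamped to the grid bounds.
        idx = tuple(min(n - 1, max(0, int(round((c - lo) / h)))) for c in p)
        phi, normal = field["cells"][idx]
        if phi < 0:
            # Move outward by the stored depth to reach the scalp.
            out.append(tuple(c - phi * nc for c, nc in zip(p, normal)))
        else:
            out.append(p)
    return out
```

The expensive distance queries happen once in `build_levelset`; each run-time sample then costs a single table lookup, which is the point of precomputing the field.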

With the calculated sample locations, we proceed to reconstruct the full geometry of the hair details. In particular, we build a Bishop frame [5] for each strip and use the parallel transport method to calculate the transformation at each sample point. The full output hair mesh can then be transformed via linear blending of these sample transformations.

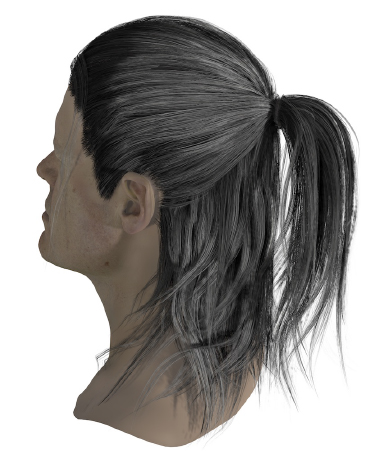

We show how our system can help author high-quality hair models with large variations. Figure 11 shows modeling results created with a very sparse set of guide strips. We first validate the performance of hairstyle prediction. As seen in the first and second columns, our prediction network captures high-level features of the input strips, such as hair length and curliness. We then present the effect of blending and deformation in fitting the hair models to the input key strips. Although the base model matches the query strips at a high level, its details deviate from the user’s intentions. The proposed blending and deformation algorithm produces results with realistic local details that better follow the input guide strokes.