Modeling realistic human characters is frequently done using 3D recordings of the shape and appearance of real people across a set of different facial expressions [Pighin et al. 1998; Alexander et al. 2010] to build blendshape facial models. Believable characters which cross the "Uncanny Valley" require high-quality geometry, texture maps, reflectance properties, and surface detail at the level of skin pores and fine wrinkles. Unfortunately, there has not yet been a technique for recording such datasets which is near-instantaneous and relatively low-cost. While some facial capture techniques are instantaneous and inexpensive [Beeler et al. 2010; Bradley et al. 2010], these do not generally provide lighting-independent texture maps, specular reflectance information, or high-resolution surface normal detail for relighting. In contrast, techniques which use multiple photographs from spherical lighting setups [Weyrich et al. 2006; Ghosh et al. 2011] do capture such reflectance properties, but this comes at the expense of longer capture times and complicated custom equipment.

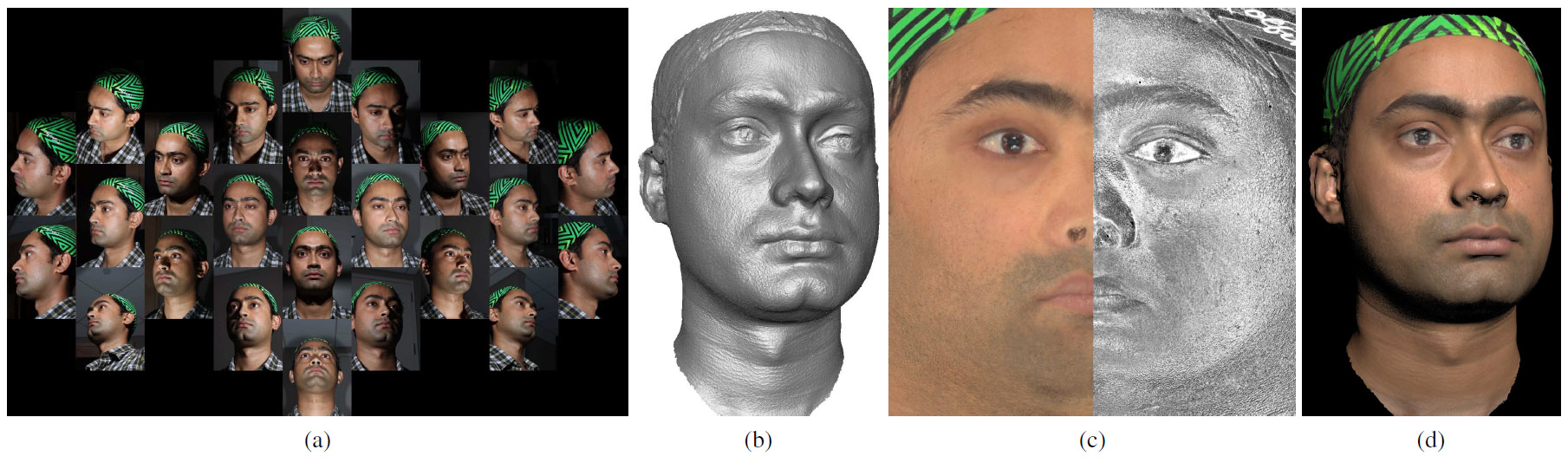

In this paper, we present a near-instant facial capture technique which records high-quality facial geometry and reflectance using commodity hardware. We use a 24-camera DSLR photogrammetry setup similar to common commercial systems and use six ring flash units to light the face. However, instead of the usual process of firing all the flashes and cameras at once, each flash is fired sequentially with a subset of the cameras, with the exposures packed milliseconds apart for a total capture time of 66ms, which is faster than the blink reflex [Bixler et al. 1967]. This arrangement produces 24 independent specular reflection angles evenly distributed across the face, allowing a shape-from-specularity approach to obtain high-frequency surface detail. However, unlike other shape-from-specularity techniques, our images are not taken from the same viewpoint. Hence, we compute an initial estimate of the facial geometry using passive stereo, and then refine the geometry using separated diffuse and specular photometric detail. The resulting system produces accurate, high-resolution facial geometry and reflectance with near-instant capture in a relatively low-cost setup.

The principal contributions of this work are:

Our capture setup is designed to record accurate 3D geometry with both diffuse and specular reflectance information per pixel while minimizing cost and complexity and maximizing the speed of capture. In all, we use 24 entry-level DSLR cameras and a set of six ring flashes arranged on a gantry seen in Fig. 2.

Camera and Flash Arrangement. The capture rig consists of 24 Canon EOS 600D entry-level consumer DSLR cameras, which record RAW mode digital images at 5202 x 3565 pixel resolution. Using consumer cameras instead of machine vision video cameras dramatically reduces cost, as machine vision cameras of this resolution are very expensive and require high-bandwidth connections to dedicated capture computers. But to keep the capture near-instantaneous, we can only capture a single image with each camera, as these entry-level cameras require at least 1/4 second before taking a second photograph.

Since our processing algorithm determines fine-scale surface detail from specular reflections, we wish to observe a specular highlight from the majority of the surface orientations of the face. We tabulated the surface orientations for four scanned facial models and found, not surprisingly, that over 90% of the orientations fell between ±90° horizontally and ±45° vertically of straight forward (Fig. 3). Thus, we arrange the flashes and cameras to create specular highlights for an even distribution of normal directions within this space as seen in Fig. 4.
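The orientation tabulation described above can be illustrated with a minimal sketch. It is not the authors' code: the coordinate convention (+z pointing straight forward from the face, +x horizontal, +y up), the function names, and the choice to weight every normal equally rather than by surface area are all assumptions made for illustration.

```python
import math

def to_angles_deg(normal):
    """Convert a surface normal to (azimuth, elevation) in degrees.

    Assumed convention: +z is 'straight forward' from the face,
    +x is horizontal, +y is up.
    """
    x, y, z = normal
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    azimuth = math.degrees(math.atan2(x, z))                      # horizontal angle
    elevation = math.degrees(math.asin(max(-1.0, min(1.0, y))))   # vertical angle
    return azimuth, elevation

def fraction_in_window(normals, max_azimuth=90.0, max_elevation=45.0, tol=1e-9):
    """Fraction of normals within +/-max_azimuth and +/-max_elevation of forward."""
    inside = 0
    for n in normals:
        az, el = to_angles_deg(n)
        if abs(az) <= max_azimuth + tol and abs(el) <= max_elevation + tol:
            inside += 1
    return inside / len(normals)

# Hypothetical sample normals; a real tabulation would use scanned mesh normals.
sample_normals = [
    (0.0, 0.0, 1.0),    # facing forward
    (1.0, 0.0, 0.0),    # facing sideways (90 degrees horizontally)
    (0.0, 1.0, 0.0),    # facing straight up
    (0.0, 0.0, -1.0),   # facing backward
    (0.0, math.sin(math.radians(30)), math.cos(math.radians(30))),  # tilted up 30 degrees
]
print(fraction_in_window(sample_normals))
```

On real data, a per-triangle area weight would likely give a more faithful estimate than the equal weighting used here.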

One way to achieve this distribution would be to place a ring flash on the lens of every camera and position the cameras over the ideal distribution of angles. Then, if each camera fires with its own ring flash, a specular highlight will be observed back in the direction of each camera. However, this requires shooting each camera with its own flash in succession, lengthening the capture process and requiring many flash units.

Instead, we leverage the fact that the position of a specular highlight depends not just on the lighting direction but also on the viewing direction, so that multiple cameras fired at once with a flash see different specular highlights according to the half-angles between the flash and the cameras. Using this fact, we arrange the 24 cameras and six diffused Sigma EM-140 ring flashes as seen in Fig. 5 to observe 24 specular highlights evenly distributed across the face. The colors indicate which cameras (solid circles) fire with which of the six flashes (dotted circles) to create observations of the specular highlights on surfaces (solid discs). For example, six cameras to the subject's left shoot with the "red" flash, four cameras shoot with the "green" flash, and a single camera shoots when the "purple" flash fires. In this arrangement, most of the cameras are not immediately adjacent to the flash they fire with, but they create specular reflections along a half-angle which does point toward a camera which is adjacent to the flash, as shown in Fig. 6. The pattern of specular reflection angles observed can be seen on a blue plastic ball in Fig. 4.

While the flashes themselves release their light in less than 1ms, the camera shutters can only synchronize to 1/200th of a second (5ms). When multiple cameras are fired along with a flash, a time window of 15ms is required since there is some variability in when the cameras take a photograph. In all, with the six flashes, four of which fire with multiple cameras, a total recording time of 66ms (1/15th sec) is achieved as in Fig. 5(b). By design, this is a shorter interval than the human blink reflex.
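The half-angle relationship used to place the cameras and flashes can be sketched as follows. For an ideal mirror-like reflection, a highlight appears where the surface normal bisects the direction to the flash and the direction to the camera. The positions, function names, and the point-light approximation of a ring flash are assumptions for illustration, not the layout of the actual rig.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / n for c in v)

def half_vector(surface_point, flash_pos, camera_pos):
    """Unit normal that would reflect the flash into the camera at surface_point."""
    to_flash = normalize(tuple(f - p for f, p in zip(flash_pos, surface_point)))
    to_camera = normalize(tuple(c - p for c, p in zip(camera_pos, surface_point)))
    return normalize(tuple(a + b for a, b in zip(to_flash, to_camera)))

def angle_between_deg(a, b):
    """Angle in degrees between two vectors."""
    a, b = normalize(a), normalize(b)
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.degrees(math.acos(dot))

# Hypothetical layout: face at the origin, one flash and three cameras on a
# 1 m radius sphere. All three cameras fire with the same flash.
face = (0.0, 0.0, 0.0)
flash = (0.0, 0.0, 1.0)
cameras = [
    (0.0, 0.0, 1.0),                                                  # at the flash
    (math.sin(math.radians(40)), 0.0, math.cos(math.radians(40))),   # 40 degrees away
    (1.0, 0.0, 0.0),                                                  # 90 degrees away
]
for cam in cameras:
    h = half_vector(face, flash, cam)
    print(cam, "-> highlight normal", h,
          "at", round(angle_between_deg(h, flash), 3), "deg from the flash")
```

Each camera sees its highlight on a differently oriented patch of surface, offset from the flash direction by half the flash-to-camera angle, which is why one flash firing with several cameras yields several distinct specular observations.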

Implementation Details. The one custom component in our system is a USB-programmable 80MHz Microchip PIC32 microcontroller for triggering the cameras via the remote shutter release input. The flashes are set to manual mode, full power, and are triggered by their corresponding cameras via the "hot shoe". The camera centers lie on a 1m radius sphere, framing the face using inexpensive Canon EF 50mm f/1.8 II lenses. A checkerboard calibration object is used to focus the cameras and to geometrically calibrate the cameras' intrinsic, extrinsic, and distortion parameters, with reprojection errors below one pixel. We also photograph an X-Rite ColorChecker Passport to calibrate the flash color and intensity. With the flash illumination, we can achieve a deep depth of field at an aperture of f/16 with the camera at its minimal gain of ISO 100 to provide well-focused images with minimal noise. While the cameras have built-in flashes, these could not be used due to an Electronic Through-The-Lens (ETTL) metering process involving short bursts of light before the main flash. Our ring flashes are brighter and their locations are easily derived from the camera calibrations. By design, there is no flash in the subject's line of sight, and subjects reported no discomfort from the capture process.
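To give a rough sense of why f/16 yields a deep depth of field in this configuration, the sketch below applies the standard thin-lens depth-of-field formulas to a 50mm lens. Two values are assumptions not stated in the text: a focus distance of 1 m (taken from the 1m sphere radius) and a circle of confusion of 0.019 mm, a figure commonly quoted for APS-C sensors. The results are indicative only.

```python
import math

def depth_of_field_mm(focal_mm, f_number, focus_mm, coc_mm):
    """Near and far limits of acceptable sharpness (thin-lens approximation)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far

FOCAL_MM = 50.0   # from the text: Canon EF 50mm lens
FOCUS_MM = 1000.0 # assumption: subject about 1 m from the lens
COC_MM = 0.019    # assumption: typical APS-C circle of confusion

for f_number in (1.8, 16.0):
    near, far = depth_of_field_mm(FOCAL_MM, f_number, FOCUS_MM, COC_MM)
    print(f"f/{f_number}: sharp from {near:.0f} mm to {far:.0f} mm "
          f"(total {far - near:.0f} mm)")
```

Under these assumptions, stopping down from the lens's maximum aperture of f/1.8 to f/16 widens the zone of acceptable sharpness from a few centimeters to roughly the depth of a head, which is consistent with the motivation given above for using bright flash illumination.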

Alternate Designs. We considered other design elements for the system, including cross- and parallel-polarized lights and flashes, polarizing beamsplitters for diffuse/specular separation, camera/flash arrangements exploiting Helmholtz reciprocity for stereo correspondence, and a floodlit lighting condition with diffuse light from everywhere as employed in passive capture systems. While these techniques offer specific advantages for reflectance component separation, robust stereo correspondence, and/or deriving a diffuse albedo map (from floodlit illumination), respectively, we did not use them, since each would either require adding cameras and/or lights to the system for reflectance acquisition or, in the case of flood lighting, not achieve reflectance separation/estimation.

We employed our system to acquire a variety of subjects in differing facial expressions. In addition to Figure 1, Figure 9 shows the high-resolution geometry and several renderings under novel viewpoint and lighting conditions using our method. The recovered reflectance maps for one of the faces are shown in Fig. 10. Our acquisition system produces geometric quality which is competitive with more complex systems and reflectance maps not available from single-shot methods.