"It is absolutely awesome -- amazing. I'm one of the toughest critics of face capture, and even I have

to admit, these guys have nailed it. This is the first virtual human animated sequence that completely

bypasses all my subconscious warnings. I get the feeling of Emily as a person. All the subtlety is there.

This is no hype job, it's the real thing ... I officially pronounce that Image Metrics has finally built

a bridge across the Uncanny Valley and brought us to the other side."

-Peter Plantec, VFXWorld, August 07, 2008

Over the last few years our lab has been developing a new high-resolution realistic

face scanning process using our light stage systems, which we first published at the

2007 Eurographics Symposium on Rendering. In early 2008 we were approached by Image

Metrics about collaborating with them to create a realistic animated digital actor as a

demo for their booth at the approaching SIGGRAPH 2008 conference. Since we'd gotten pretty

good at scanning actors in different facial poses and Image Metrics has some really neat

facial animation technology, this seemed like a promising project to work on.

Image Metrics chose actress Emily O'Brien to be the star of the project. She plays Ms.

Jana Hawkes on "The Young and the Restless" and was nominated for a 2008 daytime Emmy

award. Emily came by our institute to get scanned in our Light Stage 5 device on the

afternoon of March 24, 2008. The image to the left shows Emily in the light stage

during a scan, with all 156 of its white LED lights turned on.

Our previous light stage processes, used to capture digital actors for films such as

Spider Man 2, King Kong, Superman Returns, Spider Man 3, and Hancock, captured hundreds

of images of the actor's face from every lighting direction one at a time. This allowed

for very accurate facial reflectance to be recorded and simulated, though it required

high-end motion picture cameras, involved capturing a great deal of data, and required

a custom face rendering system based on our SIGGRAPH 2000 paper. Nonetheless, studios

such as Sony Pictures Imageworks achieved some notable virtual actor results

using these techniques.

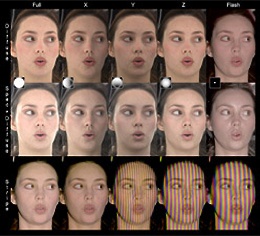

Our most recent process requires only about fifteen photographs of the face under

different lighting conditions, as seen to the right, to capture the geometry and

reflectance of a face. The photos are taken from a stereo pair of off-the-shelf

digital still cameras, and since so few images are required, everything

can be captured quickly in "burst mode" in under three seconds before the images

even need to be written to the compact flash cards.

Most of the images are shot with essentially every light in the light stage turned

on, but with different gradations of brightness. All of the light stage lights have

linear polarizer film placed on top of them, affixed in a particular pattern of

orientations, which lets us measure the specular and subsurface reflectance

components of the face independently by changing the orientation of a polarizer

on the camera.

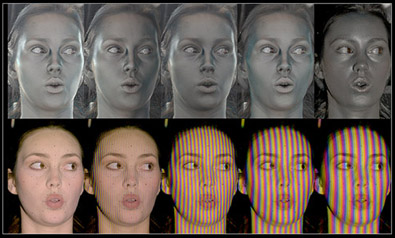

The top row shows Emily's face under four spherical gradient illumination

conditions and then a point-light condition, and all of these top images are

cross-polarized to eliminate the shine from the surface of her skin (her specular

component). What's left is the skin-colored "subsurface" reflection, often called

the "diffuse" component: this is light which scatters within the skin enough to

become depolarized before re-emerging. The right image is lit by a frontal

point-light, also cross-polarizing the specular reflection.

The middle row shows parallel-polarized images of the face, where the polarizer

on the camera is rotated so that the specular reflection returns, and in double

strength compared to the subsurface reflection. We can then see the specular

reflection on its own by subtracting the first row of images from the second row.

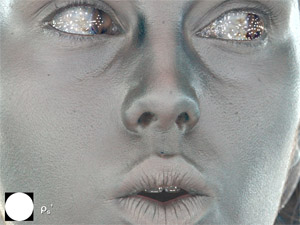

Here is a closeup of the "diffuse-all" image of Emily. Every light in the light

stage is turned on to equal intensity, and the polarizer on the camera is

oriented to block the specular reflection from every single one of the polarized

LED light sources. Even the highlights of the lights in Emily's eyes are eliminated.

This is about as flat-lit an image of a person's face as you could possibly

photograph. And it's almost the perfect image to use as the diffuse texture

map for the face if you're building a virtual character. The one problem is

that it's polluted to some extent by self-shadowing and interreflections, making

the concavities around the eyes, under the nose, and between the lips somewhat

darker and slightly more color-saturated than they should be. Depending on how

you're doing your renderings, this is either a bug or a feature. For real-time

rendering, it can actually add to the realism if this effect of "ambient occlusion"

is effectively already "baked in". If new lighting is being simulated on the face

using a global illumination technique, then it doesn't make sense to calculate new

self-shadowing to modify a texture map that already has self-shadowing present. In

this case, you can use the actor's 3D geometry to compute an approximation to the

effects of self-shadowing and/or interreflections, and then divide these effects

out of the texture image.
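
As a rough illustration of "dividing out" baked-in shading, here is a minimal sketch. It assumes a per-pixel occlusion factor has already been computed from the 3D geometry (that step is not shown), and the function name and the `floor` parameter are hypothetical choices made for this example only.

```python
def divide_out_occlusion(texture, occlusion, floor=0.05):
    """Remove an estimated self-shadowing factor from a diffuse texture.

    texture   -- rows of (r, g, b) tuples, linear values in [0, 1]
    occlusion -- rows of floats in (0, 1]; 1.0 means fully unoccluded
    floor     -- hypothetical lower bound so deep creases are not
                 amplified without limit
    """
    corrected = []
    for tex_row, occ_row in zip(texture, occlusion):
        out_row = []
        for (r, g, b), occ in zip(tex_row, occ_row):
            scale = 1.0 / max(occ, floor)
            # Clamp so the corrected texture stays in displayable range.
            out_row.append((min(r * scale, 1.0),
                            min(g * scale, 1.0),
                            min(b * scale, 1.0)))
        corrected.append(out_row)
    return corrected


# One unoccluded pixel and one pixel that receives half the light.
texture = [[(0.60, 0.40, 0.30), (0.30, 0.18, 0.12)]]
occlusion = [[1.0, 0.5]]
print(divide_out_occlusion(texture, occlusion))
```

A single scalar factor only undoes the darkening. Removing the extra color saturation caused by interreflections would need a per-channel estimate.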

This image also shows the makeup dots we put on Emily's face which help us to align

the images in the event there is any drift in her position or expression over the

fifteen images; they are relatively easy to remove digitally. Emily was extremely

good at staying still for the three-second scans and many of her datasets required

no motion compensation at all. We have already had some success at acquiring this

sort of data in real time using high-speed video [Ma et al. 2008].

This image of Emily is also lit by all of the light stage lights, but the orientation

of the polarizer has been turned 90 degrees, which allows the specular reflections to

return. You can see a sheen on her skin, and the reflections of the lights are now evident in

her eyes. In fact, the specular reflection is seen at double the strength of the

subsurface (diffuse) reflection, since the polarizer on the camera blocks about half

of the unpolarized subsurface reflection.

This image shows the combined effect of specular reflection and subsurface reflection;

to model the facial reflectance we would really like to observe the specular reflection

all on its own. To do this, we can simply subtract the diffuse-only image from this one.

Taking the difference between the diffuse-only image and the diffuse-plus-specular

image yields this image of just the specular reflection of the face. The image is

essentially colorless since this light has reflected specularly off the surface of

the skin, rather than entering the skin and having its blue and green colors significantly

absorbed by skin pigments and blood before reflecting back out.
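
The subtraction described above can be sketched in a few lines. This is an illustration only: it assumes the two photographs are already aligned and stored as linear (not gamma-encoded) grayscale values, and the function name is hypothetical.

```python
def specular_only(cross_polarized, parallel_polarized):
    """Subtract the diffuse-only image from the diffuse-plus-specular image.

    Both inputs are rows of linear intensities. The cross-polarized image
    holds the subsurface light that passes the camera polarizer; the
    parallel-polarized image holds that same light plus the specular
    reflection, so the difference is the specular component.
    """
    result = []
    for cross_row, parallel_row in zip(cross_polarized, parallel_polarized):
        # Sensor noise can push the difference slightly negative; clamp it.
        result.append([max(p - c, 0.0)
                       for c, p in zip(cross_row, parallel_row)])
    return result


cross = [[0.20, 0.30]]
parallel = [[0.60, 0.25]]
print(specular_only(cross, parallel))
```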

This image provides a useful starting point for building a digital character's specular

intensity map, or "spec map". Essentially, it shows for each pixel the intensity of the

specular reflection at that pixel. However, the specular reflection becomes amplified

near grazing angles such as at the sides of the face due to the denominator of Fresnel's

equations; we generally model and compensate for this effect using Fresnel's equations

but also tend to ignore regions of the face at extreme grazing angles. The image also

includes some of the effects of "reflection occlusion." The sides of the nose and

innermost contour of the lips appear to have no specular reflection since self-shadowing

prevents the lights from reflecting in these angles.
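
To make the grazing-angle compensation concrete, here is a minimal sketch using the standard Fresnel equations for unpolarized light on a smooth dielectric. The index of refraction of 1.4, the `min_cos` cutoff, and the function names are assumptions chosen for illustration; they are not values taken from this project.

```python
import math


def fresnel_unpolarized(cos_i, ior=1.4):
    """Fresnel reflectance for unpolarized light entering from air.

    cos_i -- cosine of the angle of incidence
    ior   -- assumed index of refraction of the surface
    """
    sin_t2 = (1.0 - cos_i * cos_i) / (ior * ior)   # Snell's law
    cos_t = math.sqrt(1.0 - sin_t2)
    r_s = ((cos_i - ior * cos_t) / (cos_i + ior * cos_t)) ** 2
    r_p = ((ior * cos_i - cos_t) / (ior * cos_i + cos_t)) ** 2
    return 0.5 * (r_s + r_p)


def compensate_specular(intensity, cos_i, ior=1.4, min_cos=0.3):
    """Rescale an observed specular value to its normal-incidence level.

    Returns None for pixels at extreme grazing angles, which are ignored
    rather than corrected.
    """
    if cos_i < min_cos:
        return None
    gain = fresnel_unpolarized(cos_i, ior) / fresnel_unpolarized(1.0, ior)
    return intensity / gain


print(compensate_specular(0.5, 1.0))   # facing the camera: unchanged
print(compensate_specular(0.5, 0.4))   # near the side of the face: reduced
print(compensate_specular(0.5, 0.1))   # extreme grazing angle: skipped
```

This does not address reflection occlusion, which depends on the surrounding geometry rather than on the angle alone.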

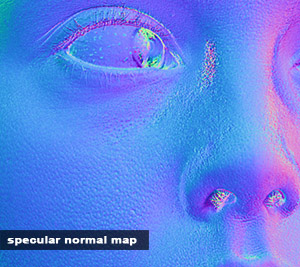

Some of our lab's most recent work [Ghosh et al. 2008] has shown that this sort of

polarization difference image also contains effects of single scattering, where the

light enters the skin but scatters exactly once off some element of the skin before

reflecting to the camera. This light picks up some of the skin's melanin color,

adding a little color to the image. However, the image is dominated by the specular

component, which will allow us to reconstruct high-resolution facial geometry.

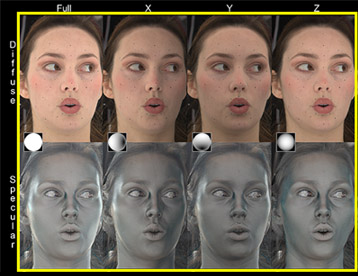

Going back to the full set of Emily images, we have subtracted the entire first row

from the entire second row to produce a set of specular-only images of the face under

different illumination conditions. The images of the face under the gradient illumination

conditions will allow us to compute surface orientations per pixel.

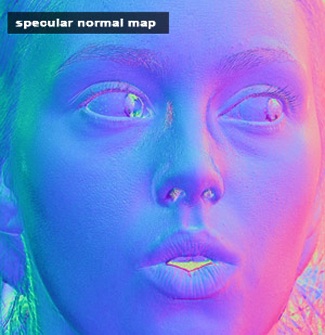

The four images of the specular reflection under the gradient illumination patterns let us derive a high-resolution normal map for the face. If we look at one pixel across this four-image sequence, its brightness in the X, Y, and Z images divided by its brightness in the fully-illuminated image uniquely encodes the direction of the light stage reflected in that pixel. This tells us the reflection vector for the pixel, and from the camera calibration we also know the view vector.

Computing the vector halfway between the reflection vector and the view vector yields a surface normal estimate for the face based on the specular reflection. Here we see the face's normal map visualized in the standard RGB = XYZ color map. The normal map contains detail at the level of skin pores and fine wrinkles.
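
The per-pixel computation can be sketched as follows. This is an illustration under stated assumptions: each gradient pattern is taken to ramp linearly from 0 to 1 along its axis, so a ratio `r` maps to the direction component `2r - 1`, and the color encoding uses the common `(n + 1) / 2` mapping. The function names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def specular_normal(grad_x, grad_y, grad_z, full, view):
    """Estimate a surface normal for one pixel of the specular images.

    grad_x, grad_y, grad_z -- brightness under the three gradient patterns
    full                   -- brightness under full-on illumination
    view                   -- vector from the surface point toward the camera
    """
    if full <= 0.0:
        return None  # no specular signal at this pixel
    # Assumed pattern: intensity (d + 1) / 2 along each axis, so ratio -> d.
    reflection = normalize(tuple(2.0 * g / full - 1.0
                                 for g in (grad_x, grad_y, grad_z)))
    view = normalize(view)
    # The normal lies halfway between the reflection and view vectors.
    return normalize(tuple(r + v for r, v in zip(reflection, view)))


def normal_to_rgb(normal):
    """Map a unit normal from [-1, 1] to [0, 1] for display as RGB = XYZ."""
    return tuple(0.5 * (c + 1.0) for c in normal)


n = specular_normal(1.0, 0.5, 0.5, 1.0, view=(0.0, 0.0, 1.0))
print(n, normal_to_rgb(n))
```

A pixel whose reflection vector points exactly away from the camera has no well-defined halfway vector; such pixels would need to be skipped in practice.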

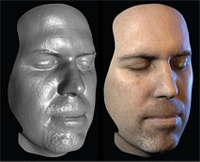

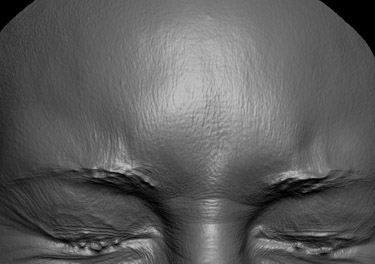

LOW-RES GEOMETRY

The last set of images in the scanning process are a set of color fringe patterns

which let us robustly form pixel correspondences between the left and right viewpoints

of the face. From these correspondences and the camera calibration, we can triangulate

a 3D triangle mesh of Emily's face. However, these images of the face show the subsurface

facial reflectance, which originates beneath the surface of the skin and blurs the

incident illumination. As a result, the geometry is relatively smooth and misses the

skin texture detail that we would like to see in our scans.
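
For readers unfamiliar with triangulation, here is a minimal sketch of one common approach, midpoint triangulation. It assumes each matched pixel has already been converted, via the camera calibration, into a ray with an origin and a direction; that conversion and the function name are assumptions of this example, and the method shown is not necessarily the one used for these scans.

```python
def triangulate_midpoint(origin_l, dir_l, origin_r, dir_r):
    """Return the 3D point closest to two camera rays.

    Each ray is origin + s * direction. The rays rarely intersect exactly,
    so the midpoint of their closest approach is used.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    w = tuple(a - b for a, b in zip(origin_l, origin_r))
    a = dot(dir_l, dir_l)
    b = dot(dir_l, dir_r)
    c = dot(dir_r, dir_r)
    d = dot(dir_l, w)
    e = dot(dir_r, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None  # rays are parallel, no unique closest point
    s = (b * e - c * d) / denom   # distance parameter along the left ray
    t = (a * e - b * d) / denom   # distance parameter along the right ray
    p_l = tuple(o + s * k for o, k in zip(origin_l, dir_l))
    p_r = tuple(o + t * k for o, k in zip(origin_r, dir_r))
    return tuple(0.5 * (p + q) for p, q in zip(p_l, p_r))


# Two cameras one unit either side of the origin, both seeing (0, 0, 5).
print(triangulate_midpoint((-1, 0, 0), (1, 0, 5), (1, 0, 0), (-1, 0, 5)))
```

Repeating this for every corresponding pixel pair gives a point cloud that can then be connected into a triangle mesh.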

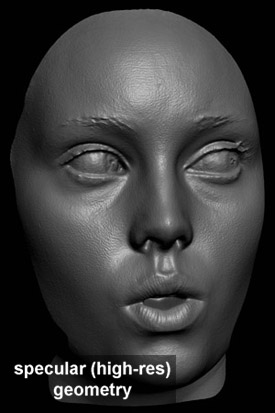

HIGH-RES GEOMETRY

We add in the skin texture detail by essentially embossing the specular normal map onto the 3D mesh.

By doing this, a high-resolution version of the mesh is created and the vertices of

each triangle are allowed to move forward and back until they best exhibit the same

surface normals as the normal map. Our lab first described this process on the web

in some work involving Light Stage 2 back in 2001, though back then we were using

normal maps built from the diffuse facial reflection observed in traditional light

stage data. The result is a very high-resolution 3D scan, with different skin

textures clearly observable in different areas of the face.
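
The idea of letting vertices move until they exhibit the normals in the normal map can be illustrated on a one-dimensional height profile. The sketch below is a simplified least-squares stand-in, not the solver used for these scans: it assumes a cross-section of the mesh with unit sample spacing, and the `weight` and `iterations` parameters and function names are hypothetical.

```python
def slope_from_normal(nx, nz):
    """Slope of a height profile whose normal is (nx, nz) in the profile plane."""
    return -nx / nz


def emboss_profile(base, target_slopes, weight=0.01, iterations=5000):
    """Refine a smooth height profile so its slopes match a normal map.

    base          -- heights from the low-resolution geometry
    target_slopes -- desired slope between samples k and k + 1
                     (one fewer entry than base)
    weight        -- how strongly heights are held to the base profile
    """
    heights = list(base)
    n = len(heights)
    for _ in range(iterations):
        # Gauss-Seidel sweep: set each height to the value that minimizes
        # the slope mismatch with its neighbors plus the pull toward base.
        for k in range(n):
            total = weight * base[k]
            count = weight
            if k > 0:
                total += heights[k - 1] + target_slopes[k - 1]
                count += 1.0
            if k < n - 1:
                total += heights[k + 1] - target_slopes[k]
                count += 1.0
            heights[k] = total / count
    return heights


flat = [0.0] * 5
slopes = [1.0, -1.0, -1.0, 1.0]   # a bump followed by a dip
print(emboss_profile(flat, slopes))
```

The small `weight` keeps the overall shape anchored to the low-resolution geometry while the fine relief comes from the normals, which mirrors the division of labor between the two data sources described above.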

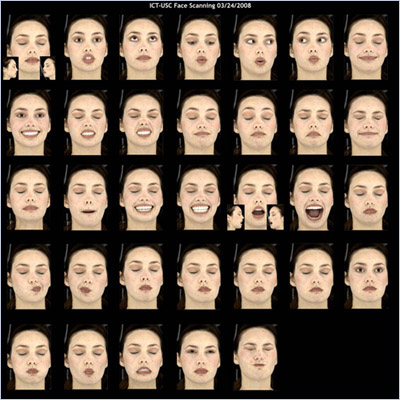

Image Metrics planned out thirty-three facial expressions for us to capture Emily in,

based loosely on Paul Ekman's Facial Action Coding System.

There are a lot of things going on with her mouth and a number of things happening with her

eyes - Emily did a great job staying still for all of them. Two of the scans - one with eyes closed

and one with eyes open - were acquired from the two sides of the face as well as

from the front, as seen in the insets. This allowed us to merge together a 3D model

of the face covering from ear to ear.

Building a digital actor from scans of multiple facial expressions is itself a commonly

practiced technique - we used it ourselves in 2004 when we scanned actress Jessica Vallot

in about 40 facial expressions for our Animated Facial Reflectance Fields project, and

going further back, ILM acquired multiple 3D scans of actress Mary Elizabeth Mastrantonio

to create the animated water creature in The Abyss.

Left: This particular scan of Emily shows a variety of skin textures on her forehead, cheeks,

nose, lips, and chin. If you click the image, the textures and their variety become even more

evident on the rendering of the 3D geometry.

Right: The fourteen images circulating to the left show a sampling of the high-resolution scans

taken of Emily in different facial expressions. A lot goes on in a face as it moves!

The first scan above (A) shows Emily pulling her mouth to one side, and an interesting

pattern of skin buckling develops across the top of her lip. This kind of dynamic

behavior would take an especially talented digital artist to model realistically.

Just as dramatic an effect is the stretching of the skin texture on her cheek. The skin

pores greatly elongate and become shallower, looking almost nothing like the skin pore

texture observed for the same cheek in the neutral scan. This was a skin phenomenon we

hadn't observed before, and one that should enhance the realism of virtual characters

if it can be reproduced faithfully in a digital character.

Emily's skin pore detail in the neutral scan (B), showing no skin pore elongation - a

qualitatively different appearance than the stretched cheek texture in the previous image.

The last scan above (C) has some interesting skin detail as well. Emily was asked to

raise her eyebrows, stretching her eyelids over her eyes. The fraction-of-a-millimeter

resolution of the scan allowed us to make out the fine capillaries under her eyelid.

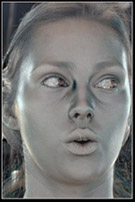

Left: A significant benefit of the photographically-based face scanning process is that we

capture perfectly aligned texture maps in addition to the high-resolution 3D geometry. We can in fact

do more than visualize Emily's scans as grey-shaded models.

Right: Here is a scan with the diffuse texture maps applied using a Lambert material.

There is no advanced skin shading or global illumination being performed, so the renderings look

chalky and notably unlike skin.

In addition to the diffuse texture map, our scanning process also provides the specular intensity map and a set of normal maps. Part of the specular normal map which we saw earlier is shown to the left.

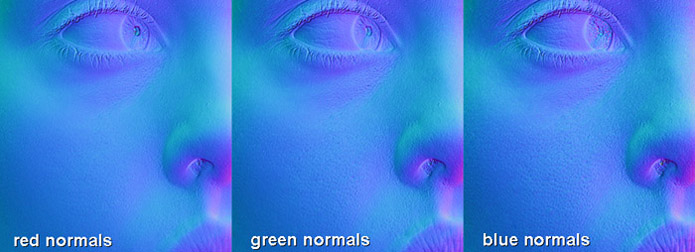

As it turns out, we can also estimate normals in a similar manner from any one of the color

channels of the diffuse reflection of the face as seen above. Since these normal maps are

calculated from light which has scattered beneath the surface of the skin, they blur the

surface detail compared to the specular normal map. The red channel has the most blur since

red light scatters the furthest within skin, while the blue channel preserves the most

detail, but still far less than the specular normal map.

The fact that the diffuse normal maps blur skin detail can be a useful feature rather than

a shortcoming, since they essentially measure the 1st-order response of the skin to illumination.

In particular we can use all of these normal maps to realistically render the face with a

real-time, local shading model called hybrid normal rendering, presented in

[Ma et al. 2007].

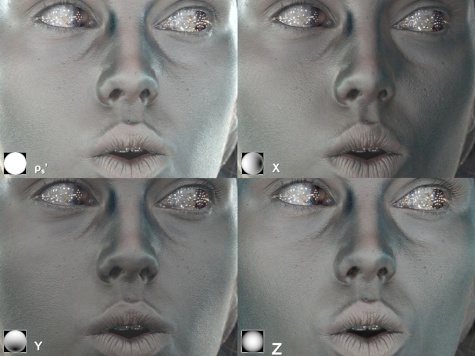

With hybrid normal rendering, we render the diffuse component of the skin as three different

Lambertian cosine lobes, one for each color channel, each driven by the corresponding diffuse

normal map and modulated by the diffuse color map. In addition, we render the shine of the

skin as a specular lobe driven by the specular normal map and modulated by the specular

intensity map. The renderings above use an implementation of hybrid normal map rendering

in Maya 8.0, and the technique is almost trivial to implement in a real-time pixel shader.
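
A per-pixel version of this shading model can be sketched as below. It is an illustration rather than the Maya implementation: the source does not say which specular lobe was used, so a Blinn-Phong lobe with a hypothetical `shininess` exponent is assumed here, and all function and parameter names are made up for the example.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    return tuple(c / length for c in v) if length > 0.0 else tuple(v)


def shade_hybrid(diffuse_color, diffuse_normals, spec_intensity, spec_normal,
                 light_dir, view_dir, shininess=50.0):
    """Shade one pixel with separate normals per reflectance component.

    diffuse_color   -- (r, g, b) value from the diffuse color map
    diffuse_normals -- three unit normals, one each for red, green, blue
    spec_intensity  -- value from the specular intensity map
    spec_normal     -- unit normal from the specular normal map
    light_dir, view_dir -- vectors from the surface toward light and camera
    """
    light = _normalize(light_dir)
    view = _normalize(view_dir)
    half = _normalize(tuple(l + v for l, v in zip(light, view)))
    # Assumed Blinn-Phong lobe driven by the sharp specular normal.
    specular = spec_intensity * max(_dot(spec_normal, half), 0.0) ** shininess
    shaded = []
    for albedo, normal in zip(diffuse_color, diffuse_normals):
        # One Lambertian cosine lobe per channel, each with its own normal.
        lambert = max(_dot(normal, light), 0.0)
        shaded.append(albedo * lambert + specular)
    return tuple(shaded)


up = (0.0, 0.0, 1.0)
print(shade_hybrid((0.6, 0.4, 0.3), (up, up, up), 0.1, up,
                   light_dir=(0.0, 0.0, 1.0), view_dir=(0.0, 0.0, 1.0)))
```

In a real shader the four normals would differ per pixel, with the red normal the smoothest and the specular normal the sharpest, which is what gives the soft yet detailed look.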

The hybrid normals rendering produces a believable "skin-like" quality in the renderings,

and encodes some of the photometric effects of self-shadowing and interreflected light as well.

The technique won't produce light bleeding into sharp shadows - that would require subsurface

scattering simulation - but it seems appropriate for most common lighting environments.

Some of our most recent work [Ghosh et al. 2008] also shows how to obtain a per-region specular

roughness map and use that for rendering as well.

We did one more piece of 3D scanning for the Emily project: a plaster cast of Emily's teeth provided by Image Metrics, adapting our 3D scanning techniques to work with greater accuracy in a smaller scanning volume. Here is a photo of the cast on the left and a rendering of Emily's digital teeth model on the right.

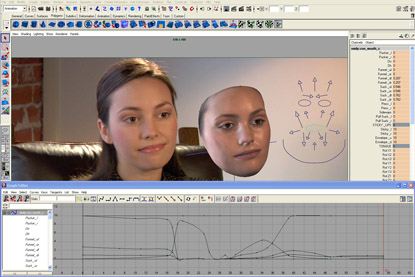

The Image Metrics team took the set of our high-resolution face scans and within the course of a few months created a fully rigged, animatable face model for Emily. At the first demo we visited, just a couple of months after delivering our scans, it was amazing to see someone manipulate the animation controls and have the digital Emily's facial rig move into facial positions completely consistent with the scans we had provided. This was no small feat - it required adding digital eyes, rigging the skin around the eyes, adding the teeth, and creating a rig that not only replicated the scans faithfully but also did reasonable things for the infinite variety of intermediate positions Emily's face could produce - especially while speaking!


Image Metrics also tracked Emily's face in the master shot, set up a shader to render Emily's face using subsurface scattering (hybrid normal rendering would be cool to try next time), and replicated the studio lighting environment using an HDR light probe image of the set and image-based lighting. They then replaced the real Emily's face with a 100% digital Emily face driven by her facial performance, frame for frame, rotoscoping her fingers as necessary when she moved her hands in front of her face. Emily's facial performance was not an easy one to match, with a variety of subtle and extreme expressions and emotions. Nonetheless, the result was a realistic live action version of the digital Emily character which many people found to be entirely convincing, even after several viewings.


The official "making-of" video clip from Image Metrics which ran at their SIGGRAPH 2008 booth can be seen to the left, including interviews with a number of the people on the project.

Image Metrics

Director/Rigger: Oleg Alexander

Computer Vision Engineer: Dr. Mike Rogers

Produced By: David Barton

Production Assistant: Edwin Gamez

Render Artist: William Lambeth

Matchmover: Steven McClellan

Facial Animation: Cesar Bravo, Matt Onheiber

Additional Modeling: Tom Tran

Actress: Emily O'Brien

Marketing: Eric Schumacher

Co-Producer: Christopher Jones

PR: Shannon McPhee

Production Manager: Peter Busch

Director of Photography: Justin Talley

Performance Analysis: Bryan Burger

Dental Cast: Sean Kennedy

Music: Confidence Head

USC - Institute for Creative Technologies

Senior Supervisor: Paul Debevec

Research Programmer: Matt Chiang

Research Programmer: Alex Ma

Supervising Researcher: Tim Hawkins

Producer: Tom Pereira

3D Scanning: Andrew Jones

Reflectance Modeling: Abhijeet Ghosh

Technical Artist: Jay Busch