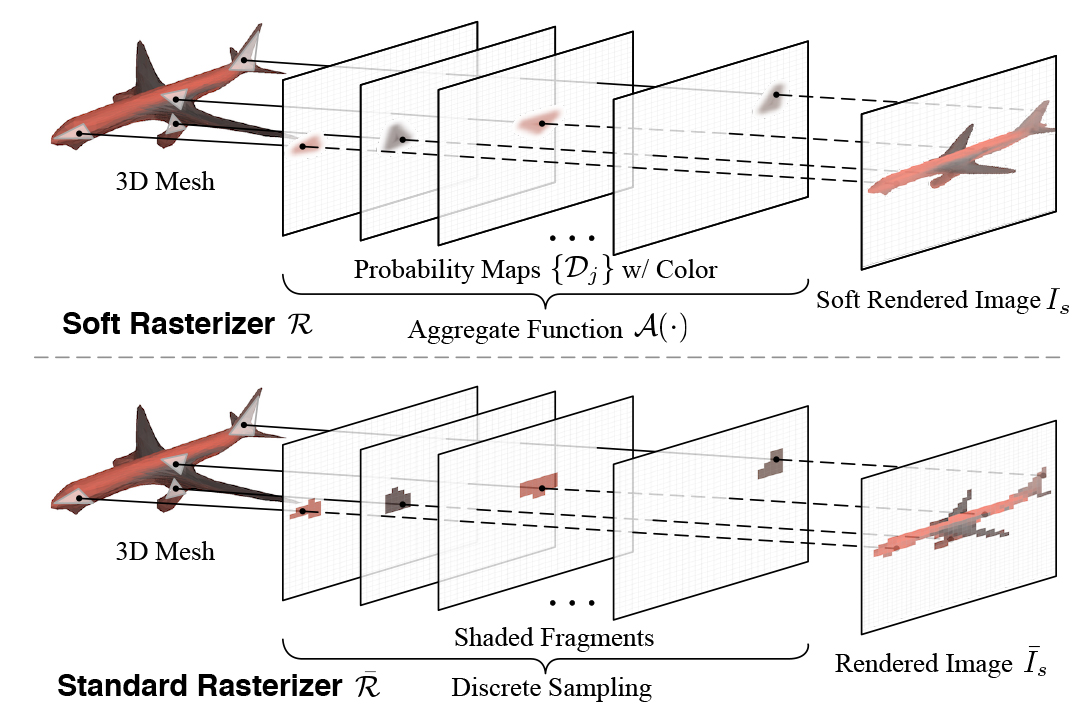

Rendering bridges the gap between 2D vision and 3D scenes by simulating the physical process of image formation. By inverting such a renderer, one can devise a learning approach that infers 3D information from 2D images. However, standard graphics renderers involve a fundamental step called rasterization, which prevents rendering from being differentiable. Unlike the state-of-the-art differentiable renderers [25], [35], which only approximate the rendering gradient during backpropagation, we propose a naturally differentiable rendering framework that is able to (1) directly render a colorized mesh using differentiable functions and (2) back-propagate efficient supervision signals to mesh vertices and their attributes from various forms of image representations. The key to our framework is a novel formulation that views rendering as an aggregation function that fuses the probabilistic contributions of all mesh triangles with respect to the rendered pixels. Such a formulation enables our framework to flow gradients to occluded and distant vertices, which cannot be achieved by previous state-of-the-art methods. We show that by using the proposed renderer, one can achieve significant improvement in 3D unsupervised single-view reconstruction both qualitatively and quantitatively. Experiments also demonstrate that our approach can handle challenging tasks in image-based shape fitting, which remain nontrivial for existing differentiable renderers.

2D images are widely used as the media for reasoning about 3D properties. In particular, image-based reconstruction has received the most attention. Conventional approaches mainly leverage stereo correspondence based on multi-view geometry [12], [17] but are restricted to the coverage provided by the multiple views. With the availability of large-scale 3D shape datasets [7], learning-based approaches [16], [19], [58] are able to work from a single image or a few images thanks to the shape prior learned from the data. To simplify the learning problem, recent works reconstruct 3D shape by predicting intermediate 2.5D representations, such as a depth map [31], image collections [24], a displacement map [20], or a normal map [49], [59]. Pose estimation is another key task in understanding the visual environment. For 3D rigid pose estimation, while early approaches attempt to cast it as a classification problem [56], recent approaches [26], [61] can directly regress the 6D pose using deep neural networks. Estimating the pose of non-rigid objects, e.g. the human face or body, is more challenging. By detecting 2D key points, great progress has been made in estimating 2D poses [5], [38], [60]. To obtain the 3D pose, shape priors [2], [34] have been incorporated to minimize the shape fitting errors in recent approaches [3], [4], [5], [23]. Our proposed differentiable renderer can provide dense rendering supervision to 3D properties, benefiting a variety of image-based 3D reasoning tasks.

Image-based 3D reconstruction plays a key role in a variety of tasks in computer vision and computer graphics, such as scene understanding, VR/AR, and autonomous driving. Reconstructing 3D objects in either mesh [47], [58] or voxel [62] representation from a single RGB image has been actively studied thanks to the advent of deep learning technologies. While most approaches to mesh reconstruction rely on supervised learning, methods working on voxel representations have strived to leverage rendering loss [8], [28], [57] to mitigate the lack of 3D data. However, the reconstruction quality of voxel-based approaches is limited, primarily due to the high computational expense and discrete nature of voxels. At the same time, unlike voxels, which can be easily rendered via differentiable projection, rendering a mesh in a differentiable fashion is non-trivial, as discussed earlier. By introducing a naturally differentiable mesh renderer, SoftRas combines the merits of both worlds: the ability to harness abundant resources of multi-view images and the high reconstruction quality of mesh representation.

To demonstrate the effectiveness of the soft rasterizer, we fix the extrinsic variables and evaluate its performance on single-view 3D reconstruction by combining it with a mesh generator. The direct gradient from image pixels to the shape and color generators enables us to achieve 3D unsupervised mesh reconstruction. Our framework is illustrated in Figure 6. Given an input image, our shape and color generators produce a triangle mesh M and its corresponding colors C, which are then fed into the soft rasterizer. The SoftRas layer renders both the silhouette I_s and the color image I_c and provides a rendering-based error signal by comparing them with the ground truths. Inspired by the latest advances in mesh learning [25], [58], we leverage a similar idea of synthesizing a 3D model by deforming a template mesh. To validate the performance of the soft rasterizer, the shape generator employs an encoder-decoder architecture identical to that of [25], [62]. The details of the shape and color generators are described in the supplemental materials.
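The rendering-based error signal described above can be illustrated with a minimal sketch. It rests on assumptions not stated in this section: that each triangle contributes an independent per-pixel coverage probability, that the silhouette fuses them with a probabilistic OR, and that the silhouette is compared to the ground truth with an IoU-style loss. The names `soft_silhouette` and `soft_iou_loss` are hypothetical and chosen only for illustration.

```python
def soft_silhouette(pixel_probs):
    """Fuse per-triangle coverage probabilities at one pixel.

    Assumed form: a probabilistic OR, 1 - prod_j (1 - D_j), so the pixel is
    'on' if at least one triangle covers it.
    """
    miss = 1.0
    for p in pixel_probs:
        miss *= (1.0 - p)
    return 1.0 - miss


def soft_iou_loss(pred, target, eps=1e-6):
    """IoU-style loss between a soft silhouette and a binary ground truth.

    pred and target are flat lists of per-pixel values in [0, 1].
    This loss is an illustrative assumption, not a confirmed choice.
    """
    inter = sum(p * t for p, t in zip(pred, target))
    union = sum(p + t - p * t for p, t in zip(pred, target))
    return 1.0 - inter / (union + eps)


# Two triangles, each covering the pixel with probability 0.5.
pixel_value = soft_silhouette([0.5, 0.5])

# A perfect prediction gives a loss near 0; a disjoint one gives a loss of 1.
loss_good = soft_iou_loss([1.0, 0.0, 1.0], [1.0, 0.0, 1.0])
loss_bad = soft_iou_loss([0.0, 1.0, 0.0], [1.0, 0.0, 1.0])
```

Because every operation here is a smooth function of the coverage probabilities, a loss of this kind can pass gradients back to every triangle that contributes to a pixel, not only the front-most one.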

Our proposed SoftRas can directly render a given mesh using differentiable functions, whereas previous rasterization-based differentiable renderers [25], [35] have to rely on off-the-shelf renderers for forward rendering. In addition, compared to standard graphics renderers, SoftRas can achieve different rendering effects in a continuous manner thanks to its probabilistic formulation. By increasing σ, the key parameter that controls the sharpness of the screen-space probability distribution, we are able to generate blurrier rendering results. Furthermore, with increased γ, one can assign more weight to the triangles on the far end, naturally achieving more transparency in the rendered image. We demonstrate these rendering effects in the supplemental materials. We will show in Section 5.3 that the blurring and transparency effects are the key to reshaping the energy landscape in order to avoid local minima.
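The two effects can be made concrete with a small sketch under stated assumptions. It assumes a sigmoid of the signed screen-space distance scaled by σ for the per-triangle probability, and softmax-style aggregation weights over normalized inverse depths with temperature γ and a small background constant `eps`. The function names, the sign convention, and the exact functional forms are illustrative assumptions and are not taken from this section.

```python
import math


def _sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def coverage_probability(signed_dist, sigma):
    """Probability that a triangle covers a pixel.

    signed_dist: screen-space distance from the pixel to the triangle boundary,
    positive inside and negative outside (assumed convention).
    sigma: sharpness parameter; larger values spread probability further
    outside the triangle, giving a blurrier result.
    """
    return _sigmoid(signed_dist / sigma)


def aggregation_weights(probs, depths, gamma, eps=1e-3):
    """Softmax-style weights over triangles plus a background term.

    depths: normalized inverse depths in [0, 1], larger means closer (assumed).
    gamma: temperature; larger values give far triangles more weight.
    Returns (list of per-triangle weights, background weight).
    """
    m = max(max(depths), eps)  # subtract the maximum for numerical stability
    scores = [p * math.exp((z - m) / gamma) for p, z in zip(probs, depths)]
    background = math.exp((eps - m) / gamma)
    total = sum(scores) + background
    return [s / total for s in scores], background / total


# A pixel slightly outside a triangle: a larger sigma yields a higher probability.
p_sharp = coverage_probability(-0.05, sigma=0.01)
p_blurry = coverage_probability(-0.05, sigma=0.1)

# A near and a far triangle: a larger gamma shifts weight to the far one.
w_opaque, _ = aggregation_weights([1.0, 1.0], [0.9, 0.2], gamma=0.01)
w_transparent, _ = aggregation_weights([1.0, 1.0], [0.9, 0.2], gamma=1.0)
```

As σ and γ approach zero, the sketch tends toward a hard coverage test and a nearest-triangle selection, which is the sense in which the blur and transparency are controlled continuously.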