We present a system for capturing and rendering life-size 3D human subjects on an

automultiscopic display. Automultiscopic 3D displays allow a large number of viewers

to experience 3D content simultaneously without the hassle of special glasses or

head gear. Such displays are ideal for human subjects as they allow for natural

personal interactions with 3D cues such as eye-gaze and complex hand gestures.

In this talk, we will focus on a case-study where our system was used to digitize

television host Morgan Spurlock for his documentary show "Inside Man" on CNN.

Automultiscopic displays work by generating many simultaneous views with

highangular density over a wide-field of view. The angular spacing between

between views must be small enough that each eye perceives a distinct and

different view. As the user moves around the display, the eye smoothly transitions

from one view to the next. We generate multiple views using a dense horizontal

array of video projectors. As video projectors continue to shrink in size, power

consumption, and cost, it is now possible to closely stack hundreds of projectors

so that their lenses are almost continuous. However this display presents a new

challenge for content acquisition. It would require hundreds of cameras to directly

measure every projector ray. We achieve similar quality with a new view interpolation

algorithm suitable for dense automultiscopic displays.

Our interpolation algorithm builds on Einarsson et al. [2006] who used optical

flow to resample a sparse light field. While Einarsson et al. was limited to cyclical

motions using a rotating turntable, we use an array of 30 unsynchronized Panasonic

X900MK 60p consumer cameras spaced over 180 degrees to capture unconstrained motion.

We first synchronize our videos within 1/120 of a second by aligning their

corresponding sound waveforms. We compute pair-wise spatial flow correspondences

between cameras using GPU optical flow. As each camera pair is processed independently,

the pipeline can be highly parallelized. As a result, we achieve much shorter

processing times than traditional multi-camera stereo reconstructions. Our view

interpolation algorithm maps images directly from the original video sequences to

all the projectors in realtime, and could easily scale to handle additional cameras

or projectors. For the "Inside Man" documentary we recorded a 54 minute interview

with Morgan Spurlock, and processed 7 minutes of 3D video for the final show.

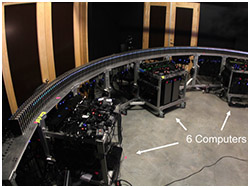

Our projector array consists of 216 video projectors mounted in a semi-circle

with a 3.4m radius. We have a narrow 0:625 º spacing between projectors which

provides a large display depth of field with minimal aliasing. We use LED-powered

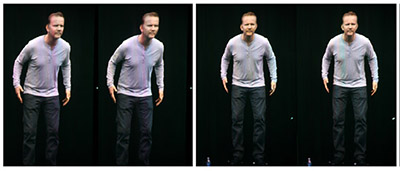

Qumi v3 projectors in a portrait orientation (Fig. 2). At this distance the

projected pixels fill a 2m tall anisotropic screen with a life-size human body

(Fig. 1). The screen material consists of a vertically-anisotropic light shaping

diffuser manufactured by Luiminit Co. The material scatters light vertically

(60 º) so that each pixel can be seen at multiple viewing heights and while

maintaining a narrow horizontal blur (1 º) to smoothly fill in the gaps

between the projectors with adjacent pixels. More details on the screen

material can be found in Jones et al. [2014]. We use six computers to render

the projector images. Each computer contains two ATI Eyefinity 7800 graphics

cards with 12 total video outputs. Each video signal is then divided three

ways using a Matrox TripleHead-to-Go video HDMI splitter.

In the future, we plan on capturing longer format interviews and other

dynamic performances. We are working to incorporate natural language processing

to allow for true interactive conversations with realistic 3D humans.

How do you create the 3D Morgan Spurlock?

We conducted an hour long interview in Light Stage 6

The stage provided flat even illumination from all directions with over 6,000 white LEDs. Fifty high-definition

Panasonic video cameras simultaneously recorded Morgan's performance. Each camera recorded a different

perspective of Morgan over 180 degrees. The cameras were closely spaced so that we could later generate

any intermediate view, which would ultimately enable us to project Morgan's digital self from all

directions on the 3D display.

Is this a hologram?

In popular culture, holograms tend to be any display that shows a floating 3D image without

the need for 3D glasses. True holography, however, refers to a specific process: recording a

3D scene with coherent laser light and recording the full waveform reflected off a scene on a

photographic plate. Our display is not a true hologram because it only recreates varying rays

of light. However, it is a true 3D display. In technical terms, what we have is an

"automultiscopic multiview display", aptly named because it exhibits multiple 3D images

in different directions without the need for stereo glasses.

How does Morgan appear 3D?

The 3D Morgan Spurlock is displayed on a dense array of 216 Qumi video projectors mounted

in a semicircle behind a flat anisotropic screen. Each projector generates a slightly different

rendering of Morgan Spurlock based on the recorded video. As the viewer moves around the screen,

she or he sees a varying 3D image composed of pixels from the subset of projectors behind the

screen. The perspective for each position matches what the view would be if Morgan was actually

standing in front of the viewer in the shared space.

How do you control so many projectors at once?

The rendering process is distributed across multiple computers with multiple graphics cards.

Matrox video splitters allow each computer to send signals to 36 projectors.

What material is the screen made out of?

The screen is a vertical anisotropic light-shaping diffuser. Unlike a conventional

Lambertian screen, which scatters light in all directions, our screen scatters light from

each projector as a narrow vertical stripe while preserving the varying angular distribution

of rays from projectors. Each point on the screen can display different colors in different

horizontal directions corresponding to the different projectors behind the screen.

Have you built any other projector arrays?

We've built a smaller projector array designed to show just a human head using 72

Texas Instruments pico projectors to illuminate a 30cm screen. We demonstrated this

system at SIGGRAPH 2013 Emerging Technologies and presented a paper at SPIE Stereoscopic

Displays and Applications XXV. Visit

An Autostereoscopic Projector Array Optimized for 3D Facial Display

for more information.

What is horizontal parallax?

Horizontal parallax is the perception of 3D depth resulting from the horizontal

motion of the head. As the head moves back and forth, the user sees a new view of

the 3D scene in front of her or him. This provides a strong perception of depth beyond

the binocular depth cue from the viewer's two eyes.

What does autostereoscopic mean? What does automultiscopic mean?

Autostereoscopic means that a display can generate multiple views simultaneously

so a viewer can see a 3D image without the need for stereo 3D glasses. Automultiscopic

is a related word, which means that multiple viewers can see the 3D image without the

need for stereo 3D glasses.

Is this system the same as the CNN's Hologram shown by Wolf Blitzer during the

2008 presidential campaign?

No. Our system produces a real 3D image you can actually see, whereas CNN's

"hologram" was purely a visual effect. To the home viewer, CNN's anchor Wolf Blitzer

appeared to be looking at three-dimensional images of guests Jessica Yellin

and Will.i.am, but this was a video overlay effect done for the home audience with

no actual 3D display technology involved. In CNN's studio, Blitzer was actually

staring across empty space toward a standard 2D flat panel television. The CNN

system used an array of multiple cameras to film the guests from multiple viewpoints

to create a novel viewpoint that matched the studio cameras. For more information

on the 2008 CNN capture process, visit

(link).

How is this display different from the Tupac performance at Coachella in 2012?

The Tupac performance did not use a 3D display, but a clever optical illusion

known as "Pepper's ghost." Pepper's ghost dates back to the 16th century and uses

a semi-reflective screen to make an image appear to float in front of a background

screen. The floating scene is simply a reflection of a hidden room or display that

is out of the audience's view. At Coachella, a semi-transparent screen was used to

reflect a 2D projected image so that it could be seen by the audience. The graphics

themselves were created by the special effects company Digital Domain. For more

information on the creation of the Tupac performance, visit

(link).

How did the digital Morgan Spurlock know how to respond to each question?

We conducted an hour long interview of Morgan Spurlock answering a range of

questions about technology, the future, and his family. As thorough as we were,

there was no way to capture every possible question that could be potentially

asked of Morgan. Morgan's conversation with his digital self was then scripted

and tightly edited to create a natural conversation. If the real Morgan had asked

a question outside the small recorded domain, we would have played a clip of

digital Morgan saying, "I don't know how to answer that question".

We are currently undergoing a more extensive interview process, with subject

interviews spanning up to 20 hours. We aim to delve deeper in capturing a subject's

life story and experiences with the eventual ability for the subject's digital

version to respond to a much larger scope of questions. The USC ICT Natural

Language Group is further developing technology that automatically parses spoken

questions a user asks the display and searches for the best possible recorded

answer within the video database. More information on the Natural Language Group

can be found here.

What other applications are there for this display?

While the display has many applications, from video games to medical

visualization, we are currently working on a much larger project to record

the 3D testimonies of Holocaust survivors. This project, "New Dimensions in

Testimony" or NDT, is a collaboration between the USC Shoah Foundation and

the USC Institute for Creative Technologies, in partnership with exhibit design

firm Conscience Display. NDT combines ICT's Light Stage technology with natural

language processing to allow users to engage with the digital testimonies

conversationally. NDT's goal is to develop interactive 3D exhibits in which

learners can have simulated educational conversations with survivors though

the fourth dimension of time. Years from now, long after the last survivor has

passed on, the New Dimensions in Testimony project can provide a path to enable

youth to listen to a survivor and ask their own questions directly, encouraging

them, each in their own way, to reflect on the deep and meaningful consequences

of the Holocaust. NDT follows the age-old tradition of passing down lessons

through oral storytelling, but with the latest technologies available.