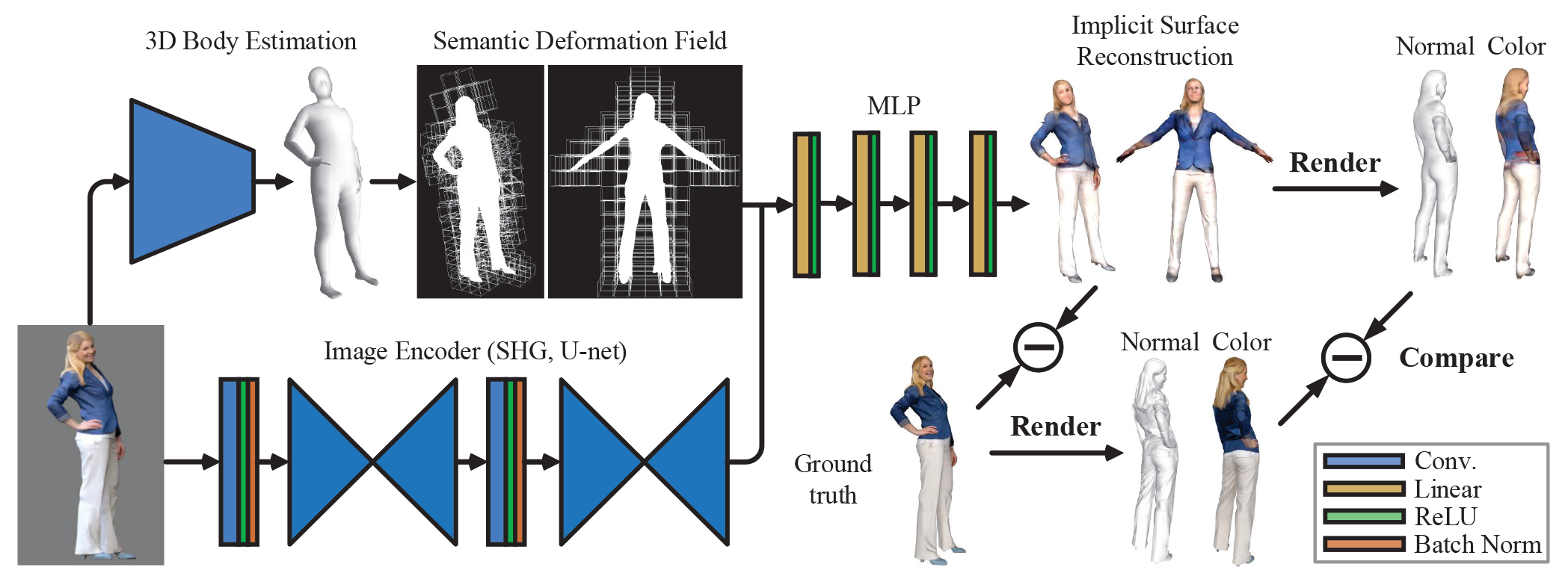

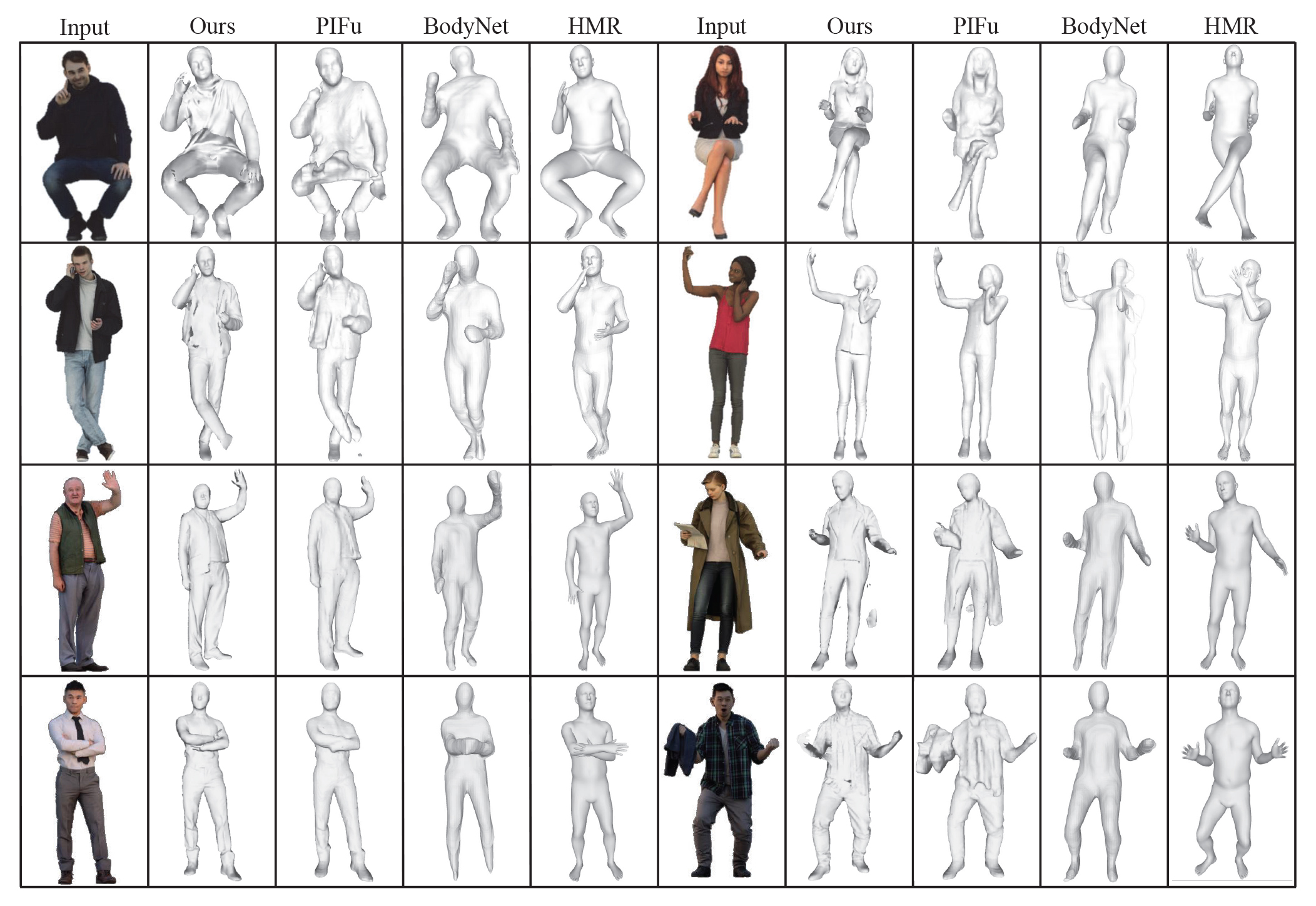

In this paper, we propose ARCH (Animatable Reconstruction of Clothed Humans), a novel end-to-end framework for accurate reconstruction of animation-ready 3D clothed humans from a monocular image. Existing approaches to digitize 3D humans struggle to handle pose variations and recover details. Also, they do not produce models that are animation ready. In contrast, ARCH is a learned pose-aware model that produces detailed 3D rigged full-body human avatars from a single unconstrained RGB image. A Semantic Space and a Semantic Deformation Field are created using a parametric 3D body estimator. They allow the transformation of 2D/3D clothed humans into a canonical space, reducing ambiguities in geometry caused by pose variations and occlusions in training data. Detailed surface geometry and appearance are learned using an implicit function representation with spatial local features. Furthermore, we propose additional per-pixel supervision on the 3D reconstruction using opacity-aware differentiable rendering. Our experiments indicate that ARCH increases the fidelity of the reconstructed humans. We obtain more than 50% lower reconstruction errors for standard metrics compared to state-of-the-art methods on public datasets. We also show numerous qualitative examples of animated, high-quality reconstructed avatars unseen in the literature so far.

The main contributions are threefold: 1) we introduce the Semantic Space (SemS) and Semantic Deformation Field (SemDF) to handle implicit function representation of clothed humans in arbitrary poses, 2) we propose opacity-aware differentiable rendering to refine our human representation via Granular Render-and-Compare, and 3) we demonstrate how reconstructed avatars can directly be rigged and skinned for animation. In addition, we learn per-pixel normals to obtain high-quality surface details, and surface albedo for relighting applications.

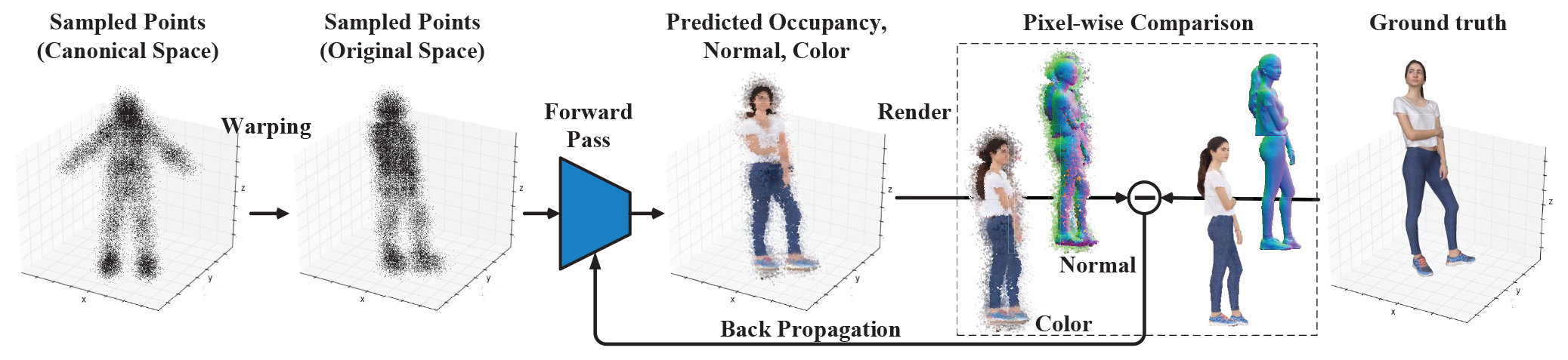

For inference, we take as input a single RGB image representing a human

in an arbitrary pose, and run the forward

model as described in Sec. 3.2 and Fig. 2. The network outputs a densely

sampled occupancy field over the canonical

space from which we use the Marching Cube algorithm [30]

to extract the isosurface at threshold 0.5. The isosurface

represents the reconstructed clothed human in the canonical

pose. Colors and normals for the whole surface are also inferred

by the forward pass and are pixel-aligned to the input

image (see Sec. 3.2). The human model can then be transformed to

its original pose R by LBS using SemDF and

per-point corresponding skinning weights W as defined in

Sec. 3.1.

Furthermore, since the implicit function representation

is equipped with skinning weights and skeleton rig, it can

naturally be warped to arbitrary poses. The proposed endto-end

framework can then be used to create a detailed 3D

avatar that can be animated with unseen sequences from a

single unconstrained photo (see Fig. 5).

In this paper, we propose ARCH, an end-to-end framework to reconstruct clothed humans from unconstrained photos. By introducing the Semantic Space and Semantic Deformation Field, we are able to handle reconstruction from arbitrary pose. We also propose a Granular Renderand-Compare loss for our implicit function representation to further constrain visual similarity under randomized camera views. ARCH shows higher fidelity in clothing details including pixel-aligned colors and normals with a wider range of human body configurations. The resulting models are animation-ready and can be driven by arbitrary motion sequences. We will explore handling heavy occlusion cases with in-the-wild images in the future.